Stephan Sigg

- Aalto University

- Maarintie 8

- 00076 Espoo

- Finland

- +358 (0)50-4666941

- stephan.sigg@aalto.fi (PGP public key)

Stephan's research interests include the design, analysis and optimisation of algorithms for distributed and ubiquitous sensing systems. He has addressed research questions related to context prediction, collaborative transmission in wireless sensor networks, context-based secure key generation, device-free passive activity recognition, and the computation of functions in wireless networks at the time of transmission.

Publications

Other: Semantic Scholar, Google Scholar, ResearchGate, DBLP

2025

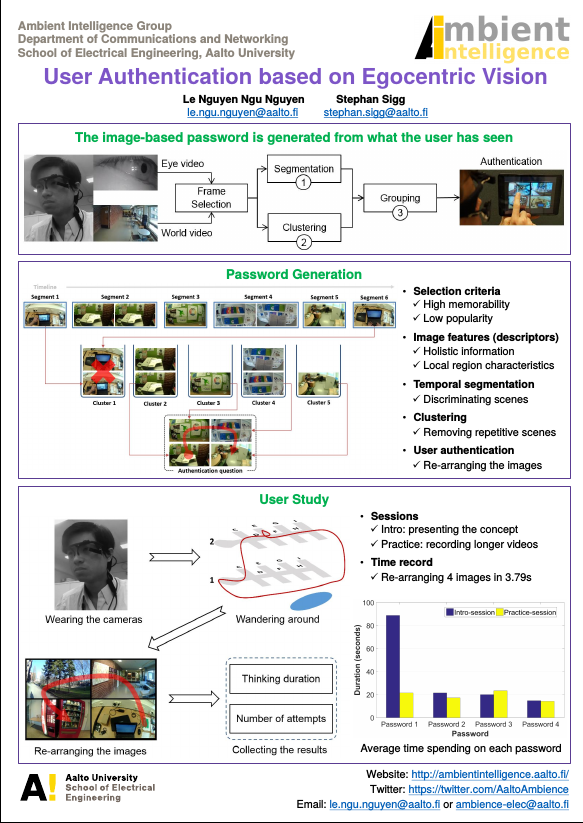

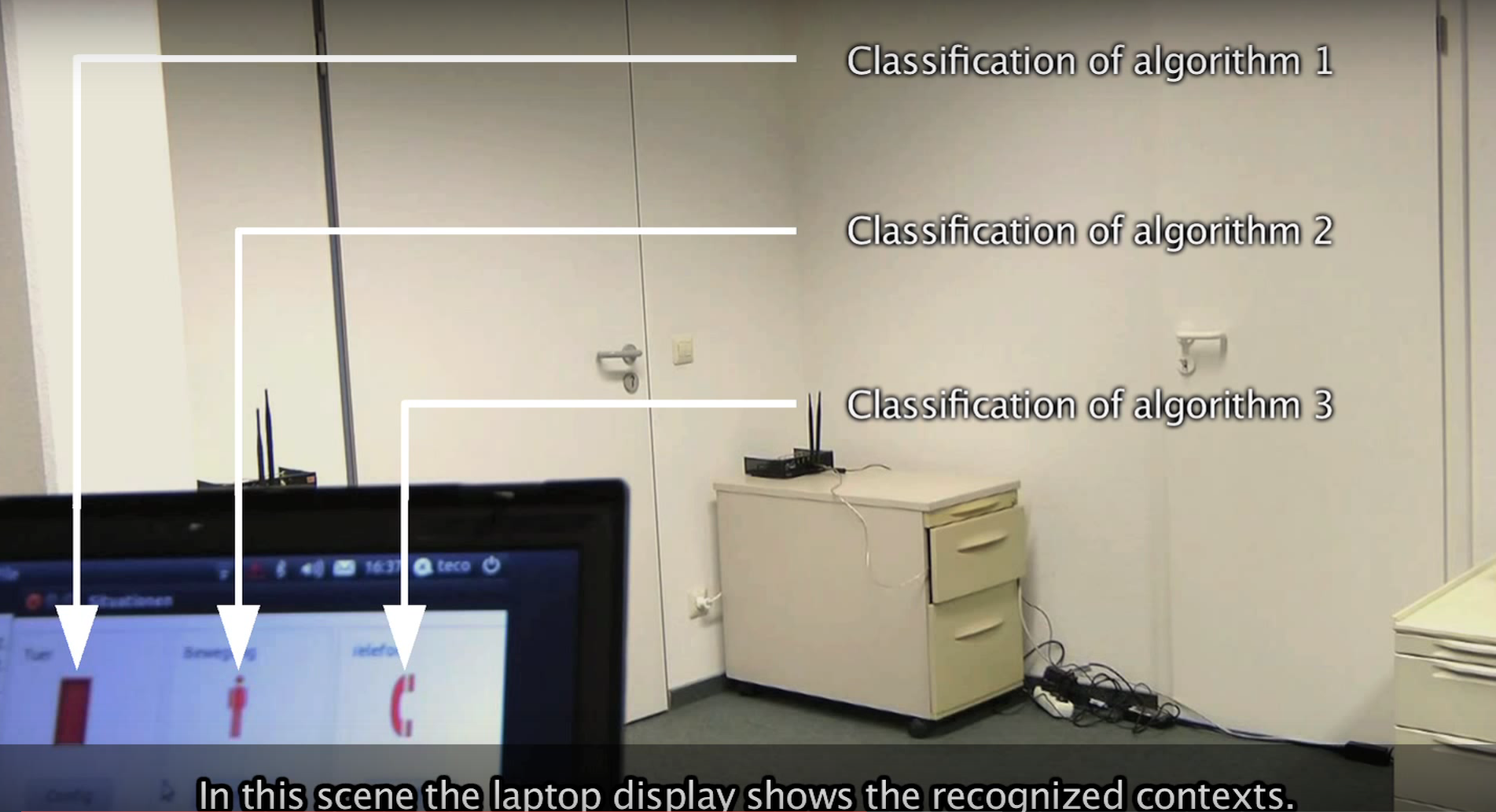

Transient Authentication from First-Person-View Video.

Nguyen, L. N.; Findling, R. D.; Poikela, M.; Zuo, S.; and Sigg, S.

Proc. ACM Interact. Mob. Wearable Ubiquitous Technol., 9(1). March 2025.

@article{10.1145/3712266,

author = {Nguyen, Le Ngu and Findling, Rainhard Dieter and Poikela, Maija and Zuo, Si and Sigg, Stephan},

title = {Transient Authentication from First-Person-View Video},

year = {2025},

issue_date = {March 2025},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {9},

number = {1},

url = {https://doi.org/10.1145/3712266},

doi = {10.1145/3712266},

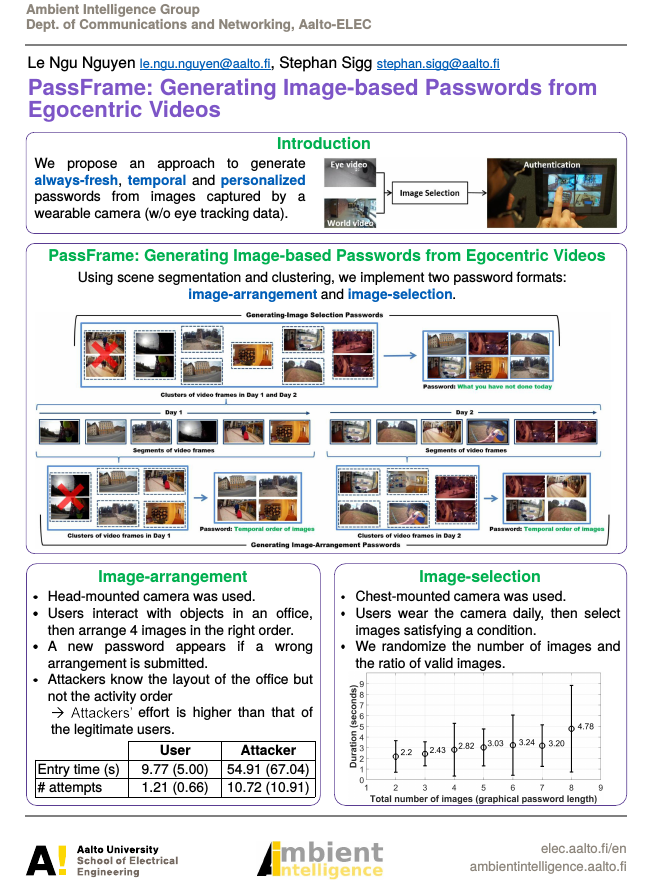

abstract = {We propose PassFrame, a system which utilizes first-person-view videos to generate personalized authentication challenges based on human episodic memory of event sequences. From the recorded videos, relevant (memorable) scenes are selected to form image-based authentication challenges. These authentication challenges are compatible with a variety of screen sizes and input modalities. As the popularity of using wearable cameras in daily life is increasing, PassFrame may serve as a convenient personalized authentication mechanism to screen-based appliances and services of a camera wearer. We evaluated the system in various settings including a spatially constrained scenario with 12 participants and a deployment on smartphones with 16 participants and more than 9 hours continuous video per participant. The authentication challenge completion time ranged from 2.1 to 9.7 seconds (average: 6 sec), which could facilitate a secure yet usable configuration of three consecutive challenges for each login. We investigated different versions of the challenges to obfuscate potential privacy leakage or ethical concerns with 27 participants. We also assessed the authentication schemes in the presence of informed adversaries, such as friends, colleagues or spouses and were able to detect attacks from diverging login behaviour.},

journal = {Proc. ACM Interact. Mob. Wearable Ubiquitous Technol.},

month = mar,

articleno = {14},

numpages = {31},

keywords = {Always-fresh challenge, First-person-view video, Shoulder-surfing resistance, Transient authentication challenge, Usable security, User authentication},

group = {ambience}}

2024

A Paradigm Shift From an Experimental-Based to a Simulation-Based Framework Using Motion-Capture Driven MIMO Radar Data Synthesis.

Waqar, S.; Muaaz, M.; Sigg, S.; and Pätzold, M.

IEEE Sensors Journal, 24(10): 16614-16628. 2024.

@ARTICLE{10500314,

author={Waqar, Sahil and Muaaz, Muhammad and Sigg, Stephan and Pätzold, Matthias},

journal={IEEE Sensors Journal},

title={A Paradigm Shift From an Experimental-Based to a Simulation-Based Framework Using Motion-Capture Driven MIMO Radar Data Synthesis},

year={2024},

volume={24},

number={10},

pages={16614-16628},

keywords={Radar;Human activity recognition;MIMO radar;Radar antennas;Sensors;MIMO;Trajectory;Motion capture;Virtual reality;Deep learning;Aspect angle;data augmentation;data synthesis;deep learning;distributed multiple-input multiple-output (MIMO) radar simulation;human activity recognition (HAR);micro-Doppler analysis;motion capture (MoCap);motion synthesis;multiclass classification;virtual reality},

doi={10.1109/JSEN.2024.3386221},

group = {ambience}}

Angle-Agnostic Radio Frequency Sensing Integrated Into 5G-NR.

Salami, D.; Hasibi, R.; Savazzi, S.; Michoel, T.; and Sigg, S.

IEEE Sensors Journal, 24(21): 36099-36114. 2024.

@ARTICLE{10684085,

author={Salami, Dariush and Hasibi, Ramin and Savazzi, Stefano and Michoel, Tom and Sigg, Stephan},

journal={IEEE Sensors Journal},

title={Angle-Agnostic Radio Frequency Sensing Integrated Into 5G-NR},

year={2024},

volume={24},

number={21},

pages={36099-36114},

keywords={Sensors;Sidelink;Radar;Radio frequency;Point cloud compression;Millimeter wave communication;Device-to-device communication;Gesture recognition;new radio (NR) sidelink;point cloud;radio frequency (RF) communication and sensing convergence},

doi={10.1109/JSEN.2024.3459428},

group = {ambience}}

RFID-based Human Activity Recognition Using Multimodal Convolutional Neural Networks.

Golipoor, S.; and Sigg, S.

In 2024 IEEE 29th International Conference on Emerging Technologies and Factory Automation (ETFA), pages 1-6, 2024.

@INPROCEEDINGS{10710698,

author={Golipoor, Sahar and Sigg, Stephan},

booktitle={2024 IEEE 29th International Conference on Emerging Technologies and Factory Automation (ETFA)},

title={RFID-based Human Activity Recognition Using Multimodal Convolutional Neural Networks},

year={2024},

volume={},

number={},

pages={1-6},

keywords={Torso;Accuracy;Neural networks;Feature extraction;Reflector antennas;Safety;Convolutional neural networks;Human activity recognition;RFID tags;Manufacturing automation;RFID;activity recognition;human-sensing;multimodal learning},

doi={10.1109/ETFA61755.2024.10710698},

group = {ambience}}

Environment and Person-independent Gesture Recognition with Non-static RFID Tags Leveraging Adaptive Signal Segmentation.

Golipoor, S.; and Sigg, S.

In 2024 IEEE 29th International Conference on Emerging Technologies and Factory Automation (ETFA), pages 1-8, 2024.

@INPROCEEDINGS{10710733,

author={Golipoor, Sahar and Sigg, Stephan},

booktitle={2024 IEEE 29th International Conference on Emerging Technologies and Factory Automation (ETFA)},

title={Environment and Person-independent Gesture Recognition with Non-static RFID Tags Leveraging Adaptive Signal Segmentation},

year={2024},

volume={},

number={},

pages={1-8},

keywords={Image segmentation;Adaptation models;Accuracy;Gesture recognition;Safety;RFID tags;Usability;Time-domain analysis;Random forests;Manufacturing automation;RFID;gesture recognition;human-sensing;signal processing;signal segmentation},

doi={10.1109/ETFA61755.2024.10710733},

group = {ambience}}

EmotionAware 2024: Eighth International Workshop on Emotion Awareness for Pervasive Computing Beyond Traditional Approaches - Welcome and Committees.

David, K.; Dobbins, C.; Heinisch, J.; Okoshi, T.; Dupré, D.; Gaggioli, A.; Gao, N.; Peltonen, E.; Sugaya, M.; Tag, B.; Van Laerhoven, K.; Mariani, S.; and Sigg, S.

2024 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events, PerCom Workshops 2024, 23–24. 2024.

2024 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events, PerCom Workshops 2024 ; Conference date: 11-03-2024 Through 15-03-2024

@article{d80aff910d284a788a9a368cb3e5e80f,

title = "EmotionAware 2024: Eighth International Workshop on Emotion Awareness for Pervasive Computing Beyond Traditional Approaches - Welcome and Committees",

author = "Klaus David and Chelsea Dobbins and Judith Heinisch and Tadashi Okoshi and Damien Dupr{\'e} and Andrea Gaggioli and Nan Gao and Ella Peltonen and Midori Sugaya and Benjamin Tag and {Van Laerhoven}, Kristof and Stefano Mariani and Stephan Sigg",

year = "2024",

doi = "10.1109/PerComWorkshops59983.2024.10502380",

language = "English",

pages = "23--24",

journal = "2024 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events, PerCom Workshops 2024",

note = "2024 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events, PerCom Workshops 2024 ; Conference date: 11-03-2024 Through 15-03-2024",

group = {ambience},

}

Direction-agnostic gesture recognition system using commercial WiFi devices.

Qin, Y.; Sigg, S.; Pan, S.; and Li, Z.

Computer Communications, 216: 34-44. 2024.

@article{QIN202434,

title = {Direction-agnostic gesture recognition system using commercial WiFi devices},

journal = {Computer Communications},

volume = {216},

pages = {34-44},

year = {2024},

issn = {0140-3664},

doi = {10.1016/j.comcom.2023.12.033},

url = {https://www.sciencedirect.com/science/article/pii/S0140366423004747},

author = {Yuxi Qin and Stephan Sigg and Su Pan and Zibo Li},

keywords = {Channel state information, Direction-agnostic, Hand gesture recognition},

abstract = {In recent years, channel state information (CSI) has been used to recognize hand gestures for contactless human–computer interaction. However, most existing solutions require precision hardware or prior learning at the same angle both during training and for inference/training in order to achieve high recognition accuracy. This requirement is unrealistic for practical instrumentation, where the orientation of a subject relative to the RF receiver may be arbitrary. We present direction-agnostic hand gesture recognition utilizing commercial WiFi devices to overcome low accuracy in non-trained observation angles. To achieve equal conditions in all recognition angles, first of all, through the circular antenna arrangement to mitigate the impact of user direction changes. Then, the orientation of users is estimated by the Fresnel zone model. Finally, the feature mapping model of users in different orientations is established, and the gesture features in the estimated direction are mapped to the benchmark direction to eliminate the influence caused by the change of user orientation. Experimental results in a typical indoor environment show that WiNDR has superior performance, and the average recognition accuracy of five common gestures is 92.38\%.},

group = {ambience},

}

Awareness in Robotics: An Early Perspective from the Viewpoint of the EIC Pathfinder Challenge "Awareness Inside".

Della Santina, C.; Corbato, C. H.; Sisman, B.; Leiva, L. A.; Arapakis, I.; Vakalellis, M.; Vanderdonckt, J.; D'Haro, L. F.; Manzi, G.; Becchio, C.; Elamrani, A.; Alirezaei, M.; Castellano, G.; Dimarogonas, D. V.; Ghosh, A.; Haesaert, S.; Soudjani, S.; Stroeve, S.; Verschure, P.; Bacciu, D.; Deroy, O.; Bahrami, B.; Gallicchio, C.; Hauert, S.; Sanz, R.; Lanillos, P.; Iacca, G.; Sigg, S.; Gasulla, M.; Steels, L.; and Sierra, C.

In Secchi, C.; and Marconi, L., editor(s), European Robotics Forum 2024, pages 108–113, Cham, 2024. Springer Nature Switzerland

@InProceedings{10.1007/978-3-031-76424-0_20,

author="Della Santina, Cosimo

and Corbato, Carlos Hernandez

and Sisman, Burak

and Leiva, Luis A.

and Arapakis, Ioannis

and Vakalellis, Michalis

and Vanderdonckt, Jean

and D'Haro, Luis Fernando

and Manzi, Guido

and Becchio, Cristina

and Elamrani, A{\"i}da

and Alirezaei, Mohsen

and Castellano, Ginevra

and Dimarogonas, Dimos V.

and Ghosh, Arabinda

and Haesaert, Sofie

and Soudjani, Sadegh

and Stroeve, Sybert

and Verschure, Paul

and Bacciu, Davide

and Deroy, Ophelia

and Bahrami, Bahador

and Gallicchio, Claudio

and Hauert, Sabine

and Sanz, Ricardo

and Lanillos, Pablo

and Iacca, Giovanni

and Sigg, Stephan

and Gasulla, Manel

and Steels, Luc

and Sierra, Carles",

editor="Secchi, Cristian

and Marconi, Lorenzo",

title="Awareness in Robotics: An Early Perspective from the Viewpoint of the EIC Pathfinder Challenge ``Awareness Inside''",

booktitle="European Robotics Forum 2024",

year="2024",

publisher="Springer Nature Switzerland",

address="Cham",

pages="108--113",

abstract="While consciousness has been historically a heavily debated topic, awareness had less success in raising the interest of scholars. However, more and more researchers are getting interested in answering questions concerning what awareness is and how it can be artificially generated. The landscape is rapidly evolving, with multiple voices and interpretations of the concept being conceived and techniques being developed. The goal of this paper is to summarize and discuss the ones among these voices connected with projects funded by the EIC Pathfinder Challenge ``Awareness Inside'' call within Horizon Europe, designed specifically for fostering research on natural and synthetic awareness. In this perspective, we dedicate special attention to challenges and promises of applying synthetic awareness in robotics, as the development of mature techniques in this new field is expected to have a special impact on generating more capable and trustworthy embodied systems.",

isbn="978-3-031-76424-0",

group = {ambience},}

Generating Multivariate Synthetic Time Series Data for Absent Sensors from Correlated Sources.

Bañuelos, J. J.; Sigg, S.; He, J.; Salim, F.; and Costa-Requena, J.

In Proceedings of the 2nd International Workshop on Networked AI Systems, of NetAISys '24, pages 19–24, New York, NY, USA, 2024. Association for Computing Machinery

@inproceedings{10.1145/3662004.3663553,

author = {Ba\~{n}uelos, Juli\'{a}n Jer\'{o}nimo and Sigg, Stephan and He, Jiayuan and Salim, Flora and Costa-Requena, Jose},

title = {Generating Multivariate Synthetic Time Series Data for Absent Sensors from Correlated Sources},

year = {2024},

isbn = {9798400706615},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3662004.3663553},

doi = {10.1145/3662004.3663553},

abstract = {Missing sensor data in human activity recognition is an active field of research that is being targeted with generative models for synthetic data generation. In contrast to most previous approaches, we aim to generate data of a sensor exclusively from data available at sensors in different body locations. Particularly, we evaluate existing approaches proposed in the literature for their suitability in this scenario. In this paper, we focus on the prediction of acceleration data and generate machine learning models based on generative adversarial networks and trained using correlated data from sensors in different body positions to generate synthetic sensor data that can replace the missing data from a sensor in a specific body position. The accuracy of the generated synthetic data is evaluated using a classification model based on a convolutional neural network for human activity recognition.},

booktitle = {Proceedings of the 2nd International Workshop on Networked AI Systems},

pages = {19–24},

numpages = {6},

keywords = {accelerometer, cnn, gan, human activity recognition, iot, multivariate time series data, sensor data, synthetic data generation},

location = {Minato-ku, Tokyo, Japan},

series = {NetAISys '24},

group = {ambience}}

Towards Green Edge Intelligence.

Ben Cheikh, S.; and Sigg, S.

In Proceedings of the 13th International Conference on the Internet of Things, of IoT '23, pages 197–199, New York, NY, USA, 2024. Association for Computing Machinery

@inproceedings{10.1145/3627050.3630737,

author = {Ben Cheikh, Sami and Sigg, Stephan},

title = {Towards Green Edge Intelligence},

year = {2024},

isbn = {9798400708541},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3627050.3630737},

doi = {10.1145/3627050.3630737},

abstract = {This study presents our ongoing activities, along with a demonstration that showcases the integration of these endeavours into a real-world application. We demonstrate the integration of IoT devices with energy harvesting systems, as well as the incorporation of deep learning techniques into IoT devices. Finally, we consider the utilization of radio frequency (RF) technology for gesture detection and classification, based on deep learning algorithms.},

booktitle = {Proceedings of the 13th International Conference on the Internet of Things},

pages = {197–199},

numpages = {3},

keywords = {Cloud computing, E-Health, Edge intelligence, Internet of Things, Smart Cities, Wireless power transfer},

location = {Nagoya, Japan},

series = {IoT '23},

group = {ambience}}

An Application Programming Interface for Android to support dedicated 5G network slicing.

Jerónimo Bañuelos, J.; Costa-Requena, J.; He, J.; Salim, F.; and Sigg, S.

In Proceedings of the 13th International Conference on the Internet of Things, of IoT '23, pages 193–196, New York, NY, USA, 2024. Association for Computing Machinery

@inproceedings{10.1145/3627050.3630735,

author = {Jer\'{o}nimo Ba\~{n}uelos, Juli\'{a}n and Costa-Requena, Jose and He, Jiayuan and Salim, Flora and Sigg, Stephan},

title = {An Application Programming Interface for Android to support dedicated 5G network slicing},

year = {2024},

isbn = {9798400708541},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3627050.3630735},

doi = {10.1145/3627050.3630735},

abstract = {Recent technological advances in telecommunications networks such as the development and deployment of the fifth-generation technology standard for broadband cellular networks (5G) have enabled a wide array of new functionalities for mobile networks, also including for the first time, reduced capability (IoT) devices. The possibility to request on-demand specific features of the mobile network such as an improved reliability, faster broadband speed, or low latency depending on the type of content that is consumed by the application through 5G network slicing will allow to provide a better user experience under adequate network conditions. Provisioning devices with 5G network slicing capabilities is of great interest for manufacturers, carriers, and developers, particularly in IoT-type communication which may feature unique bandwidth and resource requirements. As a first step, this paper proposes an Application Programming Interface (API) to act as a bridge between an Android device and the 5G core network to provide Android-based devices with 5G network slicing functionality.},

booktitle = {Proceedings of the 13th International Conference on the Internet of Things},

pages = {193–196},

numpages = {4},

keywords = {5G, AOSP, API, android, mobile devices, network slicing, software interface},

location = {Nagoya, Japan},

series = {IoT '23},

group = {ambience}}

2023

A Joint Radar and Communication Approach for 5G NR using Reinforcement Learning.

Salami, D.; Ning, W.; Ruttik, K.; Jäntti, R.; and Sigg, S.

IEEE Communications Magazine, 61(5): 106-112. 2023.

@ARTICLE{10129041,

author={Salami, Dariush and Ning, Wanru and Ruttik, Kalle and Jäntti, Riku and Sigg, Stephan},

journal={IEEE Communications Magazine},

title={A Joint Radar and Communication Approach for 5G NR using Reinforcement Learning},

year={2023},

volume={61},

number={5},

pages={106-112},

keywords={Communication systems;5G mobile communication;Estimation;Radar;Interference;Reinforcement learning;Air traffic control},

doi={10.1109/MCOM.001.2200474},

group = {ambience}}

Water quality analysis using mmWave radars.

Salami, D.; Juvakoski, A.; Vahala, R.; Beigl, M.; and Sigg, S.

In 2023 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events (PerCom Workshops), pages 412-415, 2023.


@INPROCEEDINGS{10150256,

author={Salami, Dariush and Juvakoski, Anni and Vahala, Riku and Beigl, Michael and Sigg, Stephan},

booktitle={2023 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events (PerCom Workshops)},

title={Water quality analysis using mmWave radars},

year={2023},

volume={},

number={},

pages={412-415},

keywords={Meteorological radar;Liquids;Radar detection;Radar;Water quality;Water pollution;Millimeter wave communication;mmWave radar;AI;ML;water quality analysis},

doi={10.1109/PerComWorkshops56833.2023.10150256},

group = {ambience}}

Detecting an Ataxia-Type Disease from Acceleration Data.

Kränzle, E.; and Sigg, S.

In 2023 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events (PerCom Workshops), pages 38-43, 2023.


@INPROCEEDINGS{10150335,

author={Kränzle, Eileen and Sigg, Stephan},

booktitle={2023 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events (PerCom Workshops)},

title={Detecting an Ataxia-Type Disease from Acceleration Data},

year={2023},

volume={},

number={},

pages={38-43},

keywords={Pervasive computing;Correlation;Tracking;Conferences;Sensors;High frequency;Task analysis;Ataxia;Miras;IMU},

doi={10.1109/PerComWorkshops56833.2023.10150335},

group = {ambience}}

Fast converging Federated Learning with Non-IID Data.

Naas, S.; and Sigg, S.

In 2023 IEEE 97th Vehicular Technology Conference (VTC2023-Spring), pages 1-6, 2023.


@INPROCEEDINGS{10200108,

author={Naas, Si-Ahmed and Sigg, Stephan},

booktitle={2023 IEEE 97th Vehicular Technology Conference (VTC2023-Spring)},

title={Fast converging Federated Learning with Non-IID Data},

year={2023},

volume={},

number={},

pages={1-6},

keywords={Performance evaluation;Weight measurement;Federated learning;Computational modeling;Merging;Data models;Bayes methods;Federated learning;communication reduction;non-iid data;edge computing;fog networks;Internet of things},

doi={10.1109/VTC2023-Spring57618.2023.10200108},

group = {ambience}}

Accurate RF-sensing of complex gestures using RFID with variable phase-profiles.

Golipoor, S.; and Sigg, S.

In 2023 IEEE 32nd International Symposium on Industrial Electronics (ISIE), pages 1-4, 2023.


@INPROCEEDINGS{10228178,

author={Golipoor, Sahar and Sigg, Stephan},

booktitle={2023 IEEE 32nd International Symposium on Industrial Electronics (ISIE)},

title={Accurate RF-sensing of complex gestures using RFID with variable phase-profiles},

year={2023},

volume={},

number={},

pages={1-4},

keywords={Instruments;Pipelines;Laboratories;Signal processing;Solids;Skeleton;Reflection;RF-based human sensing;RFID},

doi={10.1109/ISIE51358.2023.10228178},

group = {ambience}}

Unsupervised statistical feature-guided diffusion model for sensor-based human activity recognition.

Zuo, S.; Rey, V. F.; Suh, S.; Sigg, S.; and Lukowicz, P.

arXiv preprint arXiv:2306.05285. 2023.


@article{zuo2023unsupervised,

title={Unsupervised statistical feature-guided diffusion model for sensor-based human activity recognition},

author={Zuo, Si and Rey, Vitor Fortes and Suh, Sungho and Sigg, Stephan and Lukowicz, Paul},

journal={arXiv preprint arXiv:2306.05285},

year={2023},

group = {ambience}}

Unsupervised Diffusion Model for Sensor-based Human Activity Recognition.

Zuo, S.; Fortes, V.; Suh, S.; Sigg, S.; and Lukowicz, P.

In Adjunct Proceedings of the 2023 ACM International Joint Conference on Pervasive and Ubiquitous Computing & the 2023 ACM International Symposium on Wearable Computing, UbiComp/ISWC '23 Adjunct, page 205, New York, NY, USA, 2023. Association for Computing Machinery.


@inproceedings{10.1145/3594739.3610797,

author = {Zuo, Si and Fortes, Vitor and Suh, Sungho and Sigg, Stephan and Lukowicz, Paul},

title = {Unsupervised Diffusion Model for Sensor-based Human Activity Recognition},

year = {2023},

isbn = {9798400702006},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3594739.3610797},

doi = {10.1145/3594739.3610797},

abstract = {Recognizing human activities from sensor data is a vital task in various domains, but obtaining diverse and labeled sensor data remains challenging and costly. In this paper, we propose an unsupervised statistical feature-guided diffusion model for sensor-based human activity recognition. The proposed method aims to generate synthetic time-series sensor data without relying on labeled data, addressing the scarcity and annotation difficulties associated with real-world sensor data. By conditioning the diffusion model on statistical information such as mean, standard deviation, Z-score, and skewness, we generate diverse and representative synthetic sensor data. We conducted experiments on public human activity recognition datasets and compared the proposed method to conventional oversampling methods and state-of-the-art generative adversarial network methods. The experimental results demonstrate that the proposed method can improve the performance of human activity recognition and outperform existing techniques.},

booktitle = {Adjunct Proceedings of the 2023 ACM International Joint Conference on Pervasive and Ubiquitous Computing \& the 2023 ACM International Symposium on Wearable Computing},

pages = {205},

numpages = {1},

keywords = {Human activity recognition, Sensor data generation, Statistical feature-guided diffusion model, Unsupervised learning},

location = {Cancun, Quintana Roo, Mexico},

series = {UbiComp/ISWC '23 Adjunct},

group = {ambience}}


PPSFL: Privacy-preserving Split Federated Learning via Functional Encryption.

Ma, J.; Xixiang, L.; Yu, Y.; and Sigg, S.

September 2023.


@article{Ma_2023,

title={PPSFL: Privacy-preserving Split Federated Learning via Functional Encryption},

url={http://dx.doi.org/10.36227/techrxiv.24082659.v1},

DOI={10.36227/techrxiv.24082659.v1},

publisher={Institute of Electrical and Electronics Engineers (IEEE)},

author={Ma, Jing and Xixiang, Lv and Yu, Yong and Sigg, Stephan},

year={2023},

month=sep,

group = {ambience}}

Introduction to the Special Issue on Wireless Sensing for IoT.

Ma, H.; He, Y.; Li, M.; Patwari, N.; and Sigg, S.

ACM Trans. Internet Things, 4(4). December 2023.


@article{10.1145/3633078,

author = {Ma, Huadong and He, Yuan and Li, Mo and Patwari, Neal and Sigg, Stephan},

title = {Introduction to the Special Issue on Wireless Sensing for IoT},

year = {2023},

issue_date = {November 2023},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {4},

number = {4},

url = {https://doi.org/10.1145/3633078},

doi = {10.1145/3633078},

abstract = {ACM TIOT launched its first special issue on the theme of wireless sensing for IoT. As an important component of the special issue and a novel practice of the journal, an online virtual workshop will be held, with presentations for each of the accepted articles. Welcome to join us for online discussion! Free registration is required for an attendee of the workshop. The zoom link will be shared to registered attendees before the workshop.},

journal = {ACM Trans. Internet Things},

month = dec,

articleno = {21},

numpages = {4},

group = {ambience}}



2022

(9)

CardioID: Secure ECG-BCG agnostic interaction-free device pairing.

Zuo, S.; Sigg, S.; Beck, N.; Jähne-Raden, N.; Wolf, M. C.; and others

IEEE Access, 10: 128682–128696. 2022.


@article{zuo2022cardioid,

title={CardioID: Secure ECG-BCG agnostic interaction-free device pairing},

author={Zuo, Si and Sigg, Stephan and Beck, Nils and J{\"a}hne-Raden, Nico and Wolf, Marie Cathrine and others},

journal={IEEE Access},

volume={10},

pages={128682--128696},

year={2022},

publisher={IEEE},

group = {ambience}}

Personalized Gestures Through Motion Transfer: Protecting Privacy in Pervasive Surveillance.

Zuo, S.; and Sigg, S.

IEEE Pervasive Computing, 21(4): 8–16. 2022.


@article{zuo2022personalized,

title={Personalized Gestures Through Motion Transfer: Protecting Privacy in Pervasive Surveillance},

author={Zuo, Si and Sigg, Stephan},

journal={IEEE Pervasive Computing},

volume={21},

number={4},

pages={8--16},

year={2022},

publisher={IEEE},

group = {ambience}}

Personalized Gestures Through Motion Transfer.

Zuo, S.; and Sigg, S.

2022.


@article{zuo2022personalized,

title={Personalized Gestures Through Motion Transfer},

author={Zuo, Si and Sigg, Stephan},

year={2022},

publisher={IEEE},

group = {ambience}}

Knowledge Sharing in AI Services: A Market-based Approach.

Mohammed, T.; Naas, S.; Sigg, S.; and Francesco, M. D.

IEEE Internet of Things Journal. 2022.


@article{ahmed2022,

title={Knowledge Sharing in AI Services: A Market-based Approach},

author={Thatha Mohammed and Si-Ahmed Naas and Stephan Sigg and Mario Di Francesco},

journal={IEEE Internet of Things Journal},

year={2022},

publisher={IEEE},

project = {redi},

group = {ambience}}

User Localization Using RF Sensing. A Performance Comparison between LIS and mmWave Radars.

Rubio, C. J. V.; Salami, D.; Popovski, P.; Carvalho, E. D.; Tan, Z.; and Sigg, S.

In EUSIPCO, 2022.


@inproceedings{Rubio22,

title={User Localization Using RF Sensing. A Performance Comparison between LIS and mmWave Radars},

author={Cristian Jesus Vaca Rubio and Dariush Salami and Petar Popovski and Elisabeth De Carvalho and Zheng-Hua Tan and Stephan Sigg},

booktitle={EUSIPCO},

year={2022},

project = {windmill},

group = {ambience}}

Tesla-rapture: A lightweight gesture recognition system from mmwave radar sparse point clouds.

Salami, D.; Hasibi, R.; Palipana, S.; Popovski, P.; Michoel, T.; and Sigg, S.

IEEE Transactions on Mobile Computing. 2022.


@article{salami2022tesla,

title={Tesla-rapture: A lightweight gesture recognition system from mmwave radar sparse point clouds},

author={Salami, Dariush and Hasibi, Ramin and Palipana, Sameera and Popovski, Petar and Michoel, Tom and Sigg, Stephan},

journal={IEEE Transactions on Mobile Computing},

year={2022},

publisher={IEEE},

project = {radiosense},

group = {ambience}}

Energy-Efficient Design for RIS-Assisted UAV Communications in Beyond-5G Networks.

Deshpande, A. A.; Rubio, C. J. V.; Mohebi, S.; Salami, D.; Carvalho, E. D.; Sigg, S.; Zorzi, M.; and Zanella, A.

In IEEE MedComNet, 2022.


@inproceedings{Anay2022RIS,

title={Energy-Efficient Design for RIS-Assisted UAV Communications in Beyond-5G Networks},

author={Anay Ajit Deshpande and Cristian Jesus Vaca Rubio and Salman Mohebi and Dariush Salami and Elisabeth De Carvalho and Stephan Sigg and Michele Zorzi and Andrea Zanella},

booktitle={IEEE MedComNet},

year={2022},

project = {windmill},

group = {ambience}}

Privacy-preserving federated learning based on multi-key homomorphic encryption.

Ma, J.; Naas, S.; Sigg, S.; and Lyu, X.

International Journal of Intelligent Systems. 2022.


@article{ma2022privacy,

title={Privacy-preserving federated learning based on multi-key homomorphic encryption},

author={Ma, Jing and Naas, Si-Ahmed and Sigg, Stephan and Lyu, Xixiang},

journal={International Journal of Intelligent Systems},

year={2022},

publisher={Wiley Online Library},

group = {ambience}}

Detection of an Ataxia-type disease from EMG and IMU sensors.

Dorszewski, T.; Jiang, W.; and Sigg, S.

In The 20th International Conference on Pervasive Computing and Communications (PerCom 2022), adjunct, 2022.


@inproceedings{,

title={Detection of an Ataxia-type disease from EMG and IMU sensors},

author={Tobias Dorszewski and Weixuan Jiang and Stephan Sigg},

booktitle={The 20th International Conference on Pervasive Computing and Communications (PerCom 2022), adjunct},

year={2022},

project = {MIRAS},

group = {ambience}}


2021

(10)

Zero-shot Motion Pattern Recognition from 4D Point Clouds.

Salami, D.; and Sigg, S.

In IEEE 31st International Workshop on Machine Learning for Signal Processing (MLSP), 2021.


@InProceedings{Salami_2021_MLSP,

author = {Dariush Salami and Stephan Sigg},

booktitle = {IEEE 31st International Workshop on Machine Learning for Signal Processing (MLSP)},

title = {Zero-shot Motion Pattern Recognition from 4D Point Clouds},

year = {2021},

project ={radiosense,windmill},

group={ambience}

}

Privacy in Speech Communication Technology.

Bäckström, T.; Zarazaga, P. P.; Das, S.; and Sigg, S.

In Fonetiikan päivät-Phonetics Symposium, 2021.


@inproceedings{backstrom2021privacy,

title={Privacy in Speech Communication Technology},

author={B{\"a}ckstr{\"o}m, Tom and Zarazaga, Pablo Perez and Das, Sneha and Sigg, Stephan},

booktitle={Fonetiikan p{\"a}iv{\"a}t-Phonetics Symposium},

year={2021},

group={ambience}

}

Intuitive Privacy from Acoustic Reach: A Case for Networked Voice User-Interfaces.

Bäckström, T.; Das, S.; Zarazaga, P. P.; Fischer, J.; Findling, R. D.; Sigg, S.; and Nguyen, L. N.

In Proc. 2021 ISCA Symposium on Security and Privacy in Speech Communication, pages 57–61, 2021.


@inproceedings{backstrom2021intuitive,

title={Intuitive Privacy from Acoustic Reach: A Case for Networked Voice User-Interfaces},

author={B{\"a}ckstr{\"o}m, Tom and Das, Sneha and Zarazaga, Pablo P{\'e}rez and Fischer, Johannes and Findling, Rainhard Dieter and Sigg, Stephan and Nguyen, Le Ngu},

booktitle={Proc. 2021 ISCA Symposium on Security and Privacy in Speech Communication},

pages={57--61},

year={2021},

group={ambience}

}

Adversary Models for Mobile Device Authentication.

Mayrhofer, R.; and Sigg, S.

ACM Computing Surveys, 1-33. 2021.


@article{Mayrhofer_2021_ACMCS,

author={Rene Mayrhofer and Stephan Sigg},

journal={ACM Computing Surveys},

title={Adversary Models for Mobile Device Authentication},

year={2021},

abstract={Mobile device authentication has been a highly active research topic for over 10 years, with a vast range of methods proposed and analyzed.

In related areas, such as secure channel protocols, remote authentication, or desktop user authentication, strong, systematic, and increasingly formal threat models have been established and are used to qualitatively compare different methods.

However, the analysis of mobile device authentication is often based on weak adversary models, suggesting overly optimistic results on their respective security.

In this article, we introduce a new classification of adversaries to better analyze and compare mobile device authentication methods. We apply this classification to a systematic literature survey. The survey shows that security is still an afterthought and that most proposed protocols lack a comprehensive security analysis.

The proposed classification of adversaries provides a strong and practical adversary model that offers a comparable and transparent classification of security properties in mobile device authentication.

},

issue_date = {to appear},

publisher = {ACM},

volume = { },

number = { },

pages = {1-33},

group = {ambience},

doi = {10.1145/3477601}

}


Camouflage learning. Feature value obscuring ambient intelligence for constrained devices.

Nguyen, L. N.; Sigg, S.; Lietzen, J.; Findling, R. D.; and Ruttik, K.

IEEE Transactions on Mobile Computing, 1-17. 2021.


@article{Le_2021_TMC,

author={Le Ngu Nguyen and Stephan Sigg and Jari Lietzen and Rainhard Dieter Findling and Kalle Ruttik},

journal={IEEE Transactions on Mobile Computing},

title={Camouflage learning. Feature value obscuring ambient intelligence for constrained devices},

year={2021},

abstract={Ambient intelligence demands collaboration schemes for distributed constrained devices which are not only highly energy efficient in distributed sensing, processing and communication, but which also respect data privacy. Traditional algorithms for distributed processing suffer in Ambient intelligence domains either from limited data privacy, or from their excessive processing demands for constrained distributed devices.

In this paper, we present Camouflage learning, a distributed machine learning scheme that obscures the trained model via probabilistic collaboration using physical-layer computation offloading and demonstrate the feasibility of the approach on backscatter communication prototypes and in comparison with Federated learning. We show that Camouflage learning is more energy efficient than traditional schemes and that it requires less communication overhead while reducing the computation load through physical-layer computation offloading. The scheme is synchronization-agnostic and thus appropriate for sharply constrained, synchronization-incapable devices. We demonstrate model training and inference on four distinct datasets and investigate the performance of the scheme with respect to communication range, impact of challenging communication environments, power consumption, and the backscatter hardware prototype.

},

issue_date = {July 2021},

publisher = {IEEE},

volume = { },

number = { },

pages = {1-17},

group = {ambience},

project = {abacus}

}


BCG and ECG-based secure communication for medical devices in Body Area Networks.

Beck, N.; Zuo, S.; and Sigg, S.

In The 19th International Conference on Pervasive Computing and Communications (PerCom 2021), adjunct, 2021.


@inproceedings{Beck2020BCGECG,

title={BCG and ECG-based secure communication for medical devices in Body Area Networks},

author={Nils Beck and Si Zuo and Stephan Sigg},

booktitle={The 19th International Conference on Pervasive Computing and Communications (PerCom 2021), adjunct},

year={2021},

abstract={An increasing number of medical devices, such as pacemakers or insulin pumps, are able to communicate in wireless Body Area Networks (BANs). While this facilitates interaction between users and medical devices, something that was previously more complicated or - in the case of implanted devices - often impossible, it also raises security and privacy questions. We exploit the wide availability of ballistocardiographs (BCG) and electrocardiographs (ECG) in consumer wearables and propose MEDISCOM, an ad-hoc, implicit and secure communication protocol for medical devices in local BANs. Deriving common secret keys from a body’s BCG or ECG signal, MEDISCOM ensures confidentiality and integrity of sensitive medical data and also continuously authenticates devices, requiring no explicit user interaction and maintaining a low computational overhead. We consider relevant attack vectors and show how MEDISCOM is resilient towards them. Furthermore, we validate the security of the secret keys that our protocol derives on BCG and ECG data from 29 subjects.

},

group = {ambience},

project = {abacus}

}


Camouflage Learning.

Sigg, S.; Nguyen, L. N.; and Ma, J.

In The 19th International Conference on Pervasive Computing and Communications (PerCom 2021), adjunct, 2021.


@inproceedings{Sigg2020Camouflage,

title={Camouflage Learning},

author={Stephan Sigg and Le Ngu Nguyen and Jing Ma},

booktitle={The 19th International Conference on Pervasive Computing and Communications (PerCom 2021), adjunct},

year={2021},

abstract={Federated learning has been proposed as a concept for distributed machine learning which enforces privacy by avoiding sharing private data with a coordinator or distributed nodes. Instead of gathering datasets to a central server for model training in traditional machine learning, in federated learning, model updates are computed locally at distributed devices and merged at a coordinator. However, information on local data might be leaked through the model updates. We propose Camouflage learning, a distributed machine learning scheme that distributes both the data and the model. Neither the distributed devices nor the coordinator is at any point in time in possession of the complete model. Furthermore, data and model are obfuscated during distributed model inference and distributed model training. Camouflage learning can be implemented with various Machine learning schemes.

},

group = {ambience},

project = {radiosense, abacus}

}


Towards battery-less RF sensing.

Kodali, M.; Nguyen, L. N.; and Sigg, S.

In The 19th International Conference on Pervasive Computing and Communications (PerCom 2021), WiP, 2021.


@inproceedings{Manila2020BatteryLess,

title={Towards battery-less RF sensing},

author={Manila Kodali and Le Ngu Nguyen and Stephan Sigg},

booktitle={The 19th International Conference on Pervasive Computing and Communications (PerCom 2021), WiP},

year={2021},

abstract={Recent work has demonstrated the use of the radio interface as a sensing modality for gestures, activities and situational perception. The field generally moves towards larger bandwidths, multiple antennas, and higher, mmWave frequency domains, which allow for the recognition of minute movements. We envision another set of applications for RF sensing: battery-less autonomous sensing devices. In this work, we investigate transceiver-less passive RF-sensors which are excited by the fluctuation of the received power over the wireless channel. In particular, we demonstrate the use of battery-less RF-sensing for applications of on-body gesture recognition integrated into smart garment, as well as the integration of such sensing capabilities into smart surfaces.

},

group = {ambience},

project = {radiosense,abacus}

}


Pantomime: Mid-Air Gesture Recognition with Sparse Millimeter-Wave Radar Point Clouds.

Palipana, S.; Salami, D.; Leiva, L.; and Sigg, S.

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), 5(1): 1-27. 2021.


@article{Sameera_2021_IMWUT,

author={Sameera Palipana and Dariush Salami and Luis Leiva and Stephan Sigg},

journal={Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT)},

title={Pantomime: Mid-Air Gesture Recognition with Sparse Millimeter-Wave Radar Point Clouds},

year={2021},

abstract={We introduce Pantomime, a novel mid-air gesture recognition system exploiting spatio-temporal properties of millimeter-wave radio frequency (RF) signals. Pantomime is positioned in a unique region of the RF landscape: mid-resolution mid-range high-frequency sensing, which makes it ideal for motion gesture interaction. We configure a commercial frequency-modulated continuous-wave radar device to promote spatial information over temporal resolution by means of sparse 3D point clouds, and contribute a deep learning architecture that directly consumes the point cloud, enabling real-time performance with low computational demands. Pantomime achieves 95\% accuracy and 99\% AUC in a challenging set of 21 gestures articulated by 45 participants in two indoor environments, outperforming four state-of-the-art 3D point cloud recognizers. We also analyze the effect of environment, articulation speed, angle, and distance to the sensor. We conclude that Pantomime is resilient to various input conditions and that it may enable novel applications in industrial, vehicular, and smart home scenarios.

},

issue_date = {March 2021},

publisher = {ACM New York, NY, USA},

volume = {5},

number = {1},

pages = {1-27},

group = {ambience},

project = {radiosense,windmill}

}

We introduce Pantomime, a novel mid-air gesture recognition system exploiting spatio-temporal properties of millimeter-wave radio frequency (RF) signals. Pantomime is positioned in a unique region of the RF landscape: mid-resolution mid-range high-frequency sensing, which makes it ideal for motion gesture interaction. We configure a commercial frequency-modulated continuous-wave radar device to promote spatial information over temporal resolution by means of sparse 3D point clouds, and contribute a deep learning architecture that directly consumes the point cloud, enabling real-time performance with low computational demands. Pantomime achieves 95% accuracy and 99% AUC in a challenging set of 21 gestures articulated by 45 participants in two indoor environments, outperforming four state-of-the-art 3D point cloud recognizers. We also analyze the effect of environment, articulation speed, angle, and distance to the sensor. We conclude that Pantomime is resilient to various input conditions and that it may enable novel applications in industrial, vehicular, and smart home scenarios.

Sharing geotagged pictures for an Emotion-based Recommender System.

Hitz, A.; Naas, S.; and Sigg, S.

In The 19th International Conference on Pervasive Computing and Communications (PerCom 2021), adjunct, 2021.

@inproceedings{hitz2020Emotion,

title={Sharing geotagged pictures for an Emotion-based Recommender System},

author={Andreas Hitz and Si-Ahmed Naas and Stephan Sigg},

booktitle={The 19th International Conference on Pervasive Computing and Communications (PerCom 2021), adjunct},

year={2021},

abstract={Recommender systems are prominently used for movie or app recommendation or in e-commerce by considering profiles, past preferences and increasingly also further personalized measures. We designed and implemented an emotion-based recommender system for city visitors that takes into account user emotion and user location for the recommendation process. We conducted a comparative study between the emotion-based recommender system and recommender systems based on traditional measures. Our evaluation study involved 28 participators and the experiments showed that the emotion-based recommender system increased the average rating of the recommendation by almost 19\%. We conclude that the use of emotion can significantly improve the results and especially their level of personalization.

},

group = {ambience}

}

Recommender systems are prominently used for movie or app recommendation or in e-commerce by considering profiles, past preferences and increasingly also further personalized measures. We designed and implemented an emotion-based recommender system for city visitors that takes into account user emotion and user location for the recommendation process. We conducted a comparative study between the emotion-based recommender system and recommender systems based on traditional measures. Our evaluation study involved 28 participators and the experiments showed that the emotion-based recommender system increased the average rating of the recommendation by almost 19%. We conclude that the use of emotion can significantly improve the results and especially their level of personalization.

2020

(16)

A Global Brain fuelled by Local intelligence: Optimizing Mobile Services and Networks with AI.

Naas, S.; Mohammed, T.; and Sigg, S.

In 2020 16th International Conference on Mobility, Sensing and Networking (MSN), pages 23–32, 2020. IEEE

@inproceedings{naas2020global,

title={A Global Brain fuelled by Local intelligence: Optimizing Mobile Services and Networks with AI},

author={Naas, Si-Ahmed and Mohammed, Thaha and Sigg, Stephan},

booktitle={2020 16th International Conference on Mobility, Sensing and Networking (MSN)},

pages={23--32},

year={2020},

organization={IEEE},

group={ambience}

}

Functional Gaze Prediction in Egocentric Video.

Naas, S. A.; Jiang, X.; Sigg, S.; and Ji, Y.

In 18th International Conference on Advances in Mobile Computing and Multimedia (MoMM2020), 2020.

@inproceedings{Ahmed2020gaze,

title={Functional Gaze Prediction in Egocentric Video},

author={Si Ahmed Naas and Xiaolan Jiang and Stephan Sigg and Yusheng Ji},

booktitle={18th International Conference on Advances in Mobile Computing and Multimedia (MoMM2020)},

year={2020},

group = {ambience}

}

Acoustic Fingerprints for Access Management in Ad-Hoc Sensor Networks.

Zarazaga, P. P.; Bäckström, T.; and Sigg, S.

IEEE Access. 2020.

@article{Pablo_2020_Acoustic,

author={Pablo Pérez Zarazaga and Tom B\"ackstr\"om and Stephan Sigg},

journal={IEEE Access},

title={Acoustic Fingerprints for Access Management in Ad-Hoc Sensor Networks},

year={2020},

abstract={Voice user interfaces can offer intuitive interaction with our devices, but the usability and audio quality could be further improved if multiple devices could collaborate to provide a distributed voice user interface. To ensure that users' voices are not shared with unauthorized devices, it is however necessary to design an access management system that adapts to the users' needs. Prior work has demonstrated that a combination of audio fingerprinting and fuzzy cryptography yields a robust pairing of devices without sharing the information that they record. However, the robustness of these systems is partially based on the extensive duration of the recordings that are required to obtain the fingerprint. This paper analyzes methods for robust generation of acoustic fingerprints in short periods of time to enable the responsive pairing of devices according to changes in the acoustic scenery and can be integrated into other typical speech processing tools.},

group = {ambience},

doi = {10.1109/ACCESS.2020.3022618}

}

Voice user interfaces can offer intuitive interaction with our devices, but the usability and audio quality could be further improved if multiple devices could collaborate to provide a distributed voice user interface. To ensure that users' voices are not shared with unauthorized devices, it is however necessary to design an access management system that adapts to the users' needs. Prior work has demonstrated that a combination of audio fingerprinting and fuzzy cryptography yields a robust pairing of devices without sharing the information that they record. However, the robustness of these systems is partially based on the extensive duration of the recordings that are required to obtain the fingerprint. This paper analyzes methods for robust generation of acoustic fingerprints in short periods of time to enable the responsive pairing of devices according to changes in the acoustic scenery and can be integrated into other typical speech processing tools.

Analysing Ballistocardiography for Pervasive Healthcare.

Hytönen, R.; Tshala, A.; Schreier, J.; Holopainen, M.; Forsman, A.; Oksanen, M.; Findling, R.; Nguyen, L. N.; Jähne-Raden, N.; and Sigg, S.

In 16th International Conference on Mobility, Sensing and Networking (MSN 2020), 2020.

@inproceedings{hytonen2020BCG,

title={Analysing Ballistocardiography for Pervasive Healthcare},

author={Roni Hytönen and Alison Tshala and Jan Schreier and Melissa Holopainen and Aada Forsman and Minna Oksanen and Rainhard Findling and Le Ngu Nguyen and Nico Jähne-Raden and Stephan Sigg},

booktitle={16th International Conference on Mobility, Sensing and Networking (MSN 2020)},

year={2020},

group = {ambience}

}

A Global Brain fuelled by Local intelligence: Optimizing Mobile Services and Networks with AI.

Naas, S. A.; Mohammed, T.; and Sigg, S.

In 16th International Conference on Mobility, Sensing and Networking (MSN 2020), 2020.

@inproceedings{naas2020GlobalBrain,

title={A Global Brain fuelled by Local intelligence: Optimizing Mobile Services and Networks with AI},

author={Si Ahmed Naas and Thaha Mohammed and Stephan Sigg},

booktitle={16th International Conference on Mobility, Sensing and Networking (MSN 2020)},

year={2020},

group = {ambience}

}

A FAIR Extension for the MQTT Protocol.

Salami, D.; Streibel, O.; and Sigg, S.

In 16th International Conference on Mobility, Sensing and Networking (MSN 2020), 2020.

@inproceedings{salami2020MQTT,

title={A FAIR Extension for the MQTT Protocol},

author={Dariush Salami and Olga Streibel and Stephan Sigg},

booktitle={16th International Conference on Mobility, Sensing and Networking (MSN 2020)},

year={2020},

group = {ambience},

project={abacus}

}

Real-time Emotion Recognition for Sales.

Naas, S. A.; and Sigg, S.

In 16th International Conference on Mobility, Sensing and Networking (MSN 2020), 2020.

@inproceedings{naas2020RealTime,

title={Real-time Emotion Recognition for Sales},

author={Si Ahmed Naas and Stephan Sigg},

booktitle={16th International Conference on Mobility, Sensing and Networking (MSN 2020)},

year={2020},

group = {ambience}

}

A Multisensory Edge-Cloud Platform for Opportunistic Sensing in Cobot Environments.

Kianoush, S.; Savazzi, S.; Beschi, M.; Sigg, S.; and Rampa, V.

IEEE Internet of Things Journal. 2020.

@article{Sanaz_2020_IoT,

author={Sanaz Kianoush and Stefano Savazzi and Manuel Beschi and Stephan Sigg and Vittorio Rampa},

journal={IEEE Internet of Things Journal},

title={A Multisensory Edge-Cloud Platform for Opportunistic Sensing in Cobot Environments},

year={2020},

doi = {10.1109/JIOT.2020.3011809},

project={radiosense},

group = {ambience}

}

SVP: Sinusoidal Viewport Prediction for 360-Degree Video Streaming.

Jiang, X.; Naas, S. A.; Chiang, Y.; Sigg, S.; and Ji, Y.

IEEE Access, 8. 2020.

@article{Chiang_2020_Viewpoint,

author={Xiaolan Jiang and Si Ahmed Naas and Yi-Han Chiang and Stephan Sigg and Yusheng Ji},

journal={IEEE Access},

title={SVP: Sinusoidal Viewport Prediction for 360-Degree Video Streaming},

year={2020},