Muneeba Raja

- Aalto University

- Maarintie 8

- 00076 Espoo

- Finland

- muneeba.raja@aalto.fi

My research interests cover RF-based device-free activity recognition, in particular sentiment indicators. The objective is to exploit the capabilities of RF signals, such as Wi-Fi, to detect and learn human behaviour, emotions and attention levels. Radio signals are ubiquitous, cheap, easily deployable and less privacy-intrusive than video cameras and physiological sensors.

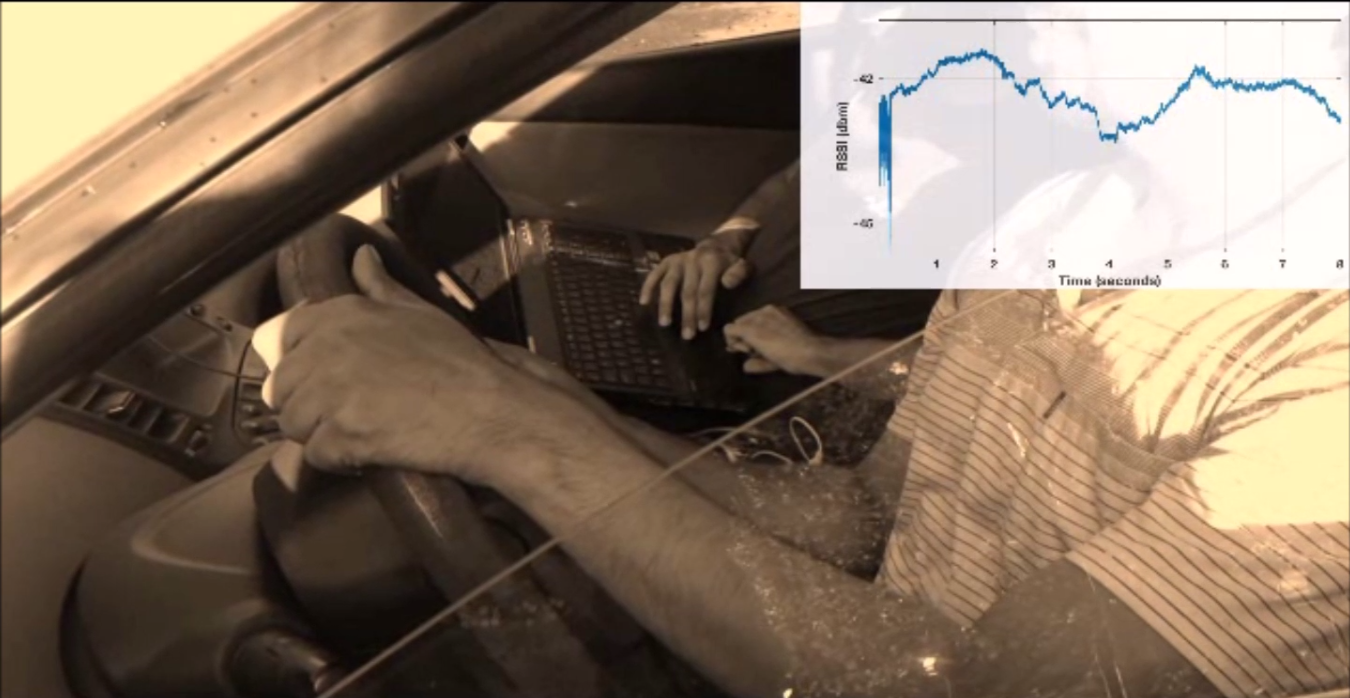

Currently, I am using this approach to estimate the attention level of a driver in a car (autonomous and non-autonomous). This is done by detecting activities and body (arm and head) movements captured by Wi-Fi signals. Head orientation (the frequency, direction and duration of turns) gives information about the state of the driver, e.g., whether the driver is lost or has found an interesting landmark. Activities involving the arms and hands, for example texting, eating, drinking, fetching objects or using the multimedia box, also give information about how an individual driver behaves while in the car. This information can be used to give warnings or feedback depending on the driver's state. In highly autonomous vehicles, where the driver is expected to use the time in the car for everyday tasks, this information can help learn the driver's routines, likes and dislikes and so provide better services. Moreover, detection of particular gestures can be used as a remote-control feature for accessories in the car.
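As a rough illustration of how head-turn frequency, direction and duration could be summarised, the following Python sketch groups a sequence of yaw-angle estimates into turn events. It assumes the yaw angles have already been derived from the radio signal by some earlier step; the function name, the 20-degree threshold and the sign convention (positive yaw meaning a turn to the left) are hypothetical choices made for this example, not the method used in the study.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HeadTurn:
    direction: str     # "left" or "right" (assumed sign convention)
    start_s: float     # time at which the turn began, in seconds
    duration_s: float  # how long the head stayed turned, in seconds


def summarize_head_turns(yaw_deg: List[float], sample_rate_hz: float,
                         threshold_deg: float = 20.0) -> List[HeadTurn]:
    """Group consecutive samples beyond +/- threshold_deg into turn events."""
    turns: List[HeadTurn] = []
    current: Optional[str] = None  # direction of the turn in progress, if any
    start = 0
    # A trailing neutral sample closes a turn that is still open at the end.
    for i, yaw in enumerate(list(yaw_deg) + [0.0]):
        if yaw > threshold_deg:
            direction: Optional[str] = "left"
        elif yaw < -threshold_deg:
            direction = "right"
        else:
            direction = None
        if direction != current:
            if current is not None:
                turns.append(HeadTurn(current, start / sample_rate_hz,
                                      (i - start) / sample_rate_hz))
            current, start = direction, i
    return turns


# Hypothetical yaw trace sampled at 10 Hz: one left turn, then one right turn.
example_yaw = [0, 0, 30, 30, 30, 0, -25, -25, 0, 0]
example_turns = summarize_head_turns(example_yaw, sample_rate_hz=10.0)
```

The number of events in `example_turns` gives the turn frequency over the observed window, while each event carries its own direction and duration.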

Wi-Fi is already available in modern cars, whereas using video cameras to obtain this information would be highly intrusive, and processing the image data would be computationally expensive.

The human study for this use case has been carried out with 45 participants at the BMW research centre in Munich. The current challenge is to distinguish accurately between arm movements, head movements, activities of interest and gestures. The results will be published in upcoming conferences.




@PhDThesis{MuneebaThesis2020,

author = "Muneeba Raja",

title = "Toward Complex 3D Movement Detection to Analyze Human Behavior via Radio-Frequency Signals",

school = "Aalto University",

year = "2020",

month = "September",

isbn = "978-952-60-3988-6",

url_Paper ={https://aaltodoc.aalto.fi/handle/123456789/46311},

abstract = {A driver's attention, parallel actions, and emotions directly influence driving behavior. Any secondary task, be it cognitive, visual, or manual, that diverts driver focus from the primary task of driving is a source of distraction. Longer response time, inability to scan the road, and missing visual cues can all lead to car crashes with serious consequences. Current research focuses on detecting distraction by means of vehicle-mounted video cameras or wearable sensors for tracking eye movements and head rotation. Facial expressions, speech, and physiological signals are also among the widely used indicators for detecting distraction. These approaches are accurate, fast, and reliable but come with a high installation cost, requirements related to lighting conditions, privacy intrusions, and energy consumption.

Over the past decade, the use of radio signals has been investigated as a possible solution for the aforementioned limitations of today's technologies. Changes in radio-signal patterns caused by movements of the human body can be analyzed and thereby used in detecting humans' gestures and activities. Human behavior and emotions, in particular, are less explored in this regard and are addressed mostly with reference to physiological signals.

The thesis exploited multiple wireless technologies (1.8~GHz, WiFi, and millimeter wave) and combinations thereof to detect complex 3D movements of a driver in a car. Upper-body movements are vital indicators of a driver's behavior in a car, and the information from these movements could be used to generate appropriate feedback, such as warnings or provision of directives for actions that would avoid jeopardizing safety. Existing wireless-system-based solutions focus primarily on either large or small movements, or they address well-defined activities. They do not consider discriminating large movements from small ones, let alone their directions, within a single system. These limitations underscore the requirement to address complex natural-behavior situations precisely such as that in a car, which demands not only isolating particular movements but also classifying and predicting them.

The research to reach the attendant goals exploited physical properties of RF signals, several hardware-software combinations, and building of algorithms to process and detect body movements -- from the simple to the complex. Additionally, distinctive feature sets were addressed for machine-learning techniques to find patterns in data and predict states accordingly. The systems were evaluated by performing extensive real-world studies.},

group = {ambience},

project = {radiosense}}


@article{Muneeba_2020_3D,

author={ Muneeba Raja and Zahra Vali and Sameera Palipana and David G. Michelson and Stephan Sigg },

journal={IEEE Access},

title={3D head motion detection using millimeter-wave Doppler radar},

year={2020},

doi = {10.1109/ACCESS.2020.2973957},

project={radiosense},

group = {ambience}

}


@article{Raja_2019_antenna,

author={Muneeba Raja and Aidan Hughes and Yixuan Xu and Parham Zarei and David G. Michelson and Stephan Sigg},

journal={IEEE Antennas and Wireless Propagation Letters},

title={Wireless Multi-frequency Feature Set to Simplify Human 3D Pose Estimation},

year={2019},

volume={18},

number={5},

pages={876-880},

doi = {10.1109/LAWP.2019.2904580},

abstract = {We present a multifrequency feature set to detect driver's three-dimensional (3-D) head and torso movements from fluctuations in the radio frequency channel due to body movements. Current features used for movement detection are based on the time-of-flight, received signal strength, and channel state information and come with the limitations of coarse tracking, sensitivity toward multipath effects, and handling corrupted phase data, respectively. There is no standalone feature set that accurately detects small and large movements and determines the direction in 3-D space. We resolve this problem by using two radio signals at widely separated frequencies in a monostatic configuration. By combining information about displacement, velocity, and direction of movements derived from the Doppler effect at each frequency, we expand the number of existing features. We separate pitch, roll, and yaw movements of head from torso and arm. The extracted feature set is used to train a K-Nearest Neighbor classification algorithm, which could provide behavioral awareness to cars while being less invasive as compared to camera-based systems. The training results on data from four participants reveal that the classification accuracy is 77.4% at 1.8 GHz, it is 87.4% at 30 GHz, and multifrequency feature set improves the accuracy to 92%.},

project = {radiosense},

group = {ambience}}
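The abstract above relies on the Doppler effect at two widely separated frequencies in a monostatic configuration. As a small worked illustration (not code from the paper), the sketch below evaluates the standard monostatic Doppler relation f_d = 2 v f_c / c at the two carrier frequencies named in the abstract. The function name and the head velocity of 0.5 m/s are assumptions made only for this example.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def doppler_shift_hz(radial_velocity_m_s: float, carrier_hz: float) -> float:
    """Doppler shift seen by a monostatic (co-located TX/RX) sensor.

    Uses f_d = 2 * v * f_c / c, where v is the target's radial velocity.
    """
    return 2.0 * radial_velocity_m_s * carrier_hz / SPEED_OF_LIGHT_M_S


# Assumed radial head velocity for illustration only.
assumed_velocity_m_s = 0.5

shift_low_hz = doppler_shift_hz(assumed_velocity_m_s, 1.8e9)   # 1.8 GHz carrier
shift_high_hz = doppler_shift_hz(assumed_velocity_m_s, 30e9)   # 30 GHz carrier

print(f"1.8 GHz: {shift_low_hz:.1f} Hz, 30 GHz: {shift_high_hz:.1f} Hz")
```

For the same movement, the shift is roughly 6 Hz at 1.8 GHz and roughly 100 Hz at 30 GHz, so the higher carrier is far more sensitive to small, slow movements. This is one plausible reading of why combining both frequencies enlarges the usable feature set.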


@article{Kianoush_2018_IoTJ,

author={Sanaz Kianoush and Muneeba Raja and Stefano Savazzi and Stephan Sigg},

journal={IEEE Internet of Things Journal},

title={A cloud-IoT platform for passive radio sensing: challenges and application case studies},

year={2018},

doi={10.1109/JIOT.2018.2834530},

group = {ambience}}


@InProceedings{Raja_2018_icdcs,

author={Muneeba Raja and Viviane Ghaderi and Stephan Sigg},

title={WiBot! In-Vehicle Behaviour and Gesture Recognition Using Wireless Network Edge},

booktitle={38th IEEE International Conference on Distributed Computing Systems (ICDCS 2018)},

year={2018},

group = {ambience}

}


@InProceedings{Raja_2018_vtc,

author={Muneeba Raja and Viviane Ghaderi and Stephan Sigg},

title={Detecting Driver's Distracted Behaviour from Wi-Fi},

booktitle={Vehicular Technology Conference (VTC 2018-Spring)},

year={2018},

group = {ambience}}


@ARTICLE{Muneeba_2017_Geospatial,

author={Muneeba Raja and Anja Exler and Samuli Hemminki and ShinIchi Konomi and Stephan Sigg and Sozo Inoue},

journal={Springer GeoInformatica},

title={Towards pervasive geospatial affect perception},

year={2017},

abstract = {Due to the enormous penetration of connected computing devices with diverse sensing and localization capabilities, a good fraction of an individual’s activities, locations, and social connections can be sensed and spatially pinpointed. We see significant potential to advance the field of personal activity sensing and tracking beyond its current state of simple activities, at the same time linking activities geospatially. We investigate the detection of sentiment from environmental, on-body and smartphone sensors and propose an affect map as an interface to accumulate and interpret data about emotion and mood from diverse set of sensing sources. In this paper, we first survey existing work on affect sensing and geospatial systems, before presenting a taxonomy of large-scale affect sensing. We discuss model relationships among human emotions and geo-spaces using networks, apply clustering algorithms to the networks and visualize clusters on a map considering space, time and mobility. For the recognition of emotion and mood, we report from two studies exploiting environmental and on-body sensors. Thereafter, we propose a framework for large-scale affect sensing and discuss challenges and open issues for future work.},

doi={10.1007/s10707-017-0294-1},

url={http://dx.doi.org/10.1007/s10707-017-0294-1},

keywords={Geospatial mapping, Emotion recognition, Device-free sensing, Activity recognition},

group = {ambience}}


@INPROCEEDINGS{Muneeba_2017_ETFA,

author={Muneeba Raja and Stephan Sigg},

booktitle={22nd IEEE International Conference on Emerging Technologies And Factory Automation (ETFA'17)},

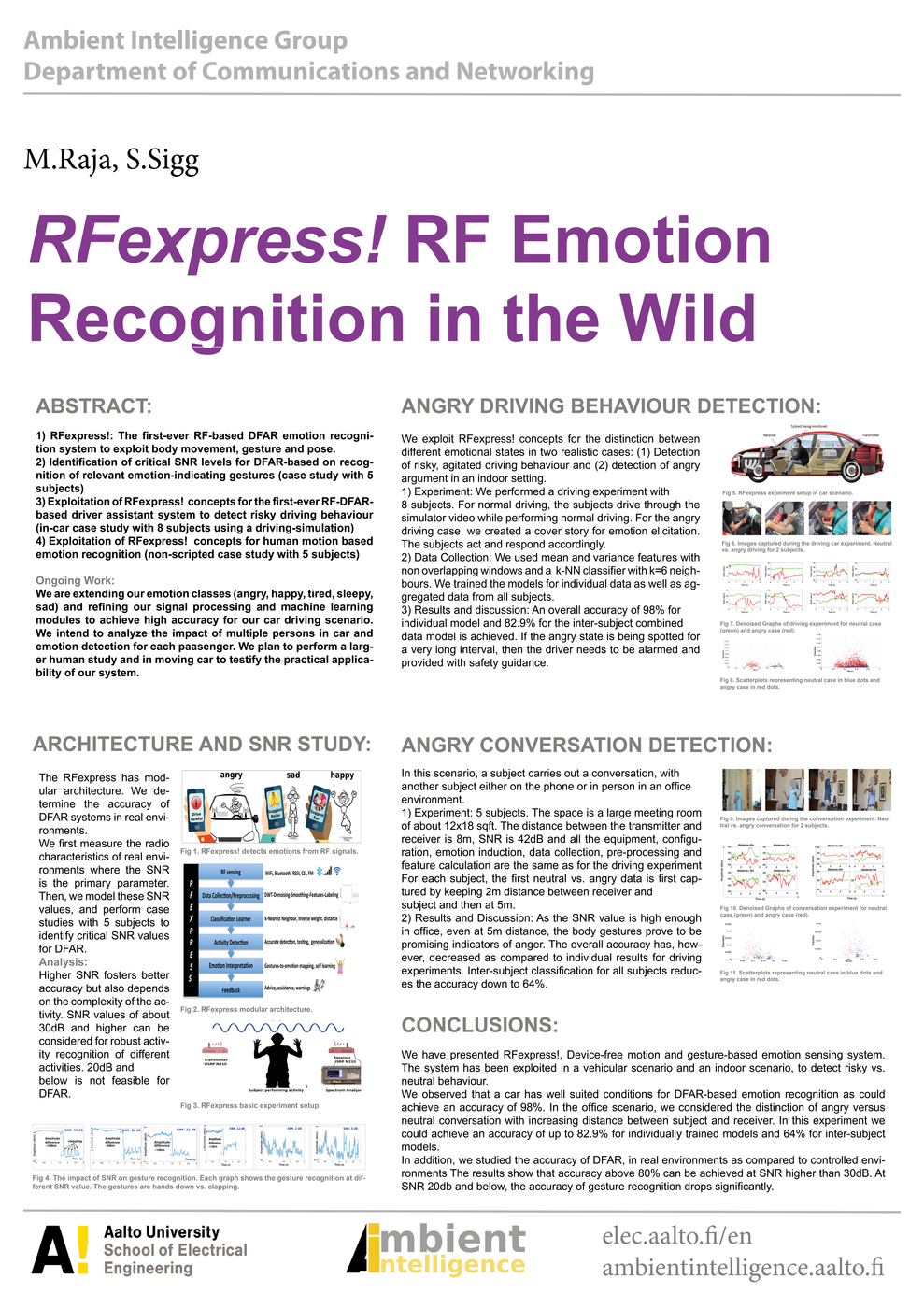

title={RFexpress! – Exploiting the wireless network edge for RF-based emotion sensing},

year={2017},

group = {ambience}}


@INPROCEEDINGS{MuneebaWiP_2017_PerCom,

author={Muneeba Raja and Stephan Sigg},

booktitle={2017 IEEE International Conference on Pervasive Computing and Communication (WiP)},

title={RFexpress! - RF Emotion Recognition in the Wild},

year={2017},

abstract={We present RFexpress! the first-ever system to recognize emotion from body movements and gestures via Device-Free Activity Recognition (DFAR). We focus on the distinction between neutral and agitated states in realistic environments. In particular, the system is able to detect risky driving behaviour in a vehicular setting as well as spotting angry conversations in an indoor environment. In case studies with 8 and 5 subjects the system could achieve recognition accuracies of 82.9% and 64%. We study the effectiveness of DFAR emotion and activity recognition systems in real environments such as cafes, malls, outdoor and office spaces. We measure radio characteristics in these environments at different days and times and analyse the impact of variations in the Signal to Noise Ratio (SNR) on the accuracy of DFAR emotion and activity recognition. In a case study with 5 subjects, we then find critical SNR values under which activity and emotion recognition results are no longer reliable.

},

%doi={10.1109/PERCOMW.2016.7457119},

month={March},

group = {ambience}}


@INPROCEEDINGS{Raja_2016_CoSDEO,

author={Muneeba Raja and Stephan Sigg},

booktitle={2016 IEEE International Conference on Pervasive Computing and Communication Workshops (PerCom Workshops)},

title={Applicability of RF-based methods for emotion recognition: A survey},

year={2016},

pages={1-6},

abstract={Human emotion recognition has attracted a lot of research in recent years. However, conventional methods for sensing human emotions are either expensive or privacy intrusive. In this paper, we explore a connection between emotion recognition and RF-based activity recognition that can lead to a novel ubiquitous emotion sensing technology. We discuss the latest literature from both domains, highlight the potential of body movements for accurate emotion detection and focus on how emotion recognition could be done using inexpensive, less privacy intrusive, device-free RF sensing methods. Applications include environment and crowd behaviour tracking in real time, assisted living, health monitoring, or also domestic appliance control. As a result of this survey, we propose RF-based device free recognition for emotion detection based on body movements. However, it requires overcoming challenges, such as accuracy, to outperform classical methods.},

keywords={Emotion recognition;Feature extraction;Mood;Sensors;Speech;Speech recognition;Tracking},

doi={10.1109/PERCOMW.2016.7457119},

month={March},

group = {ambience}}

Beamsteering for Training-free Counting of

Multiple Humans Performing Distinct Activities

Beamsteering for Training-free Counting of

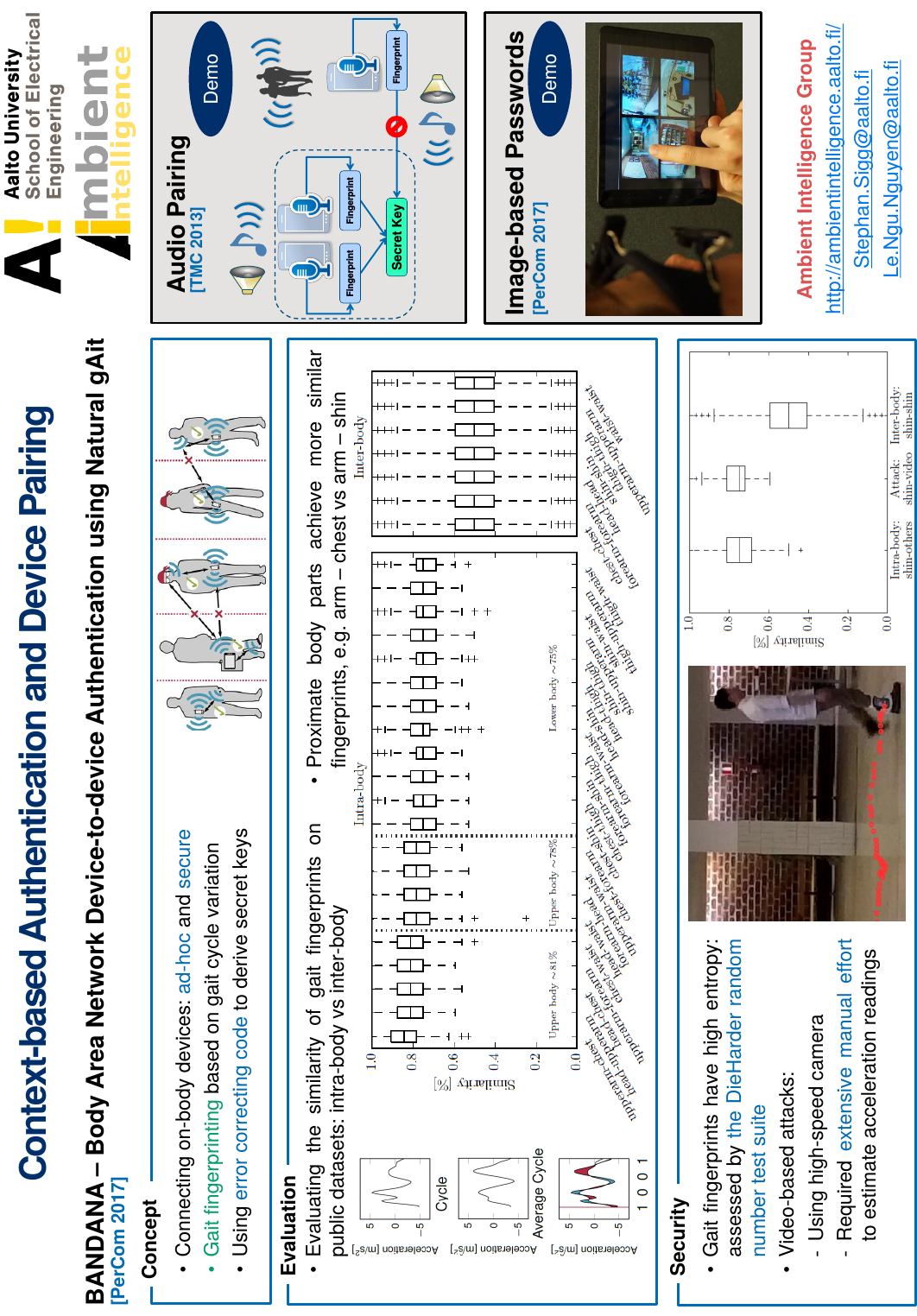

Multiple Humans Performing Distinct Activities RFexpress! - RF Emotion Recognition in the Wild

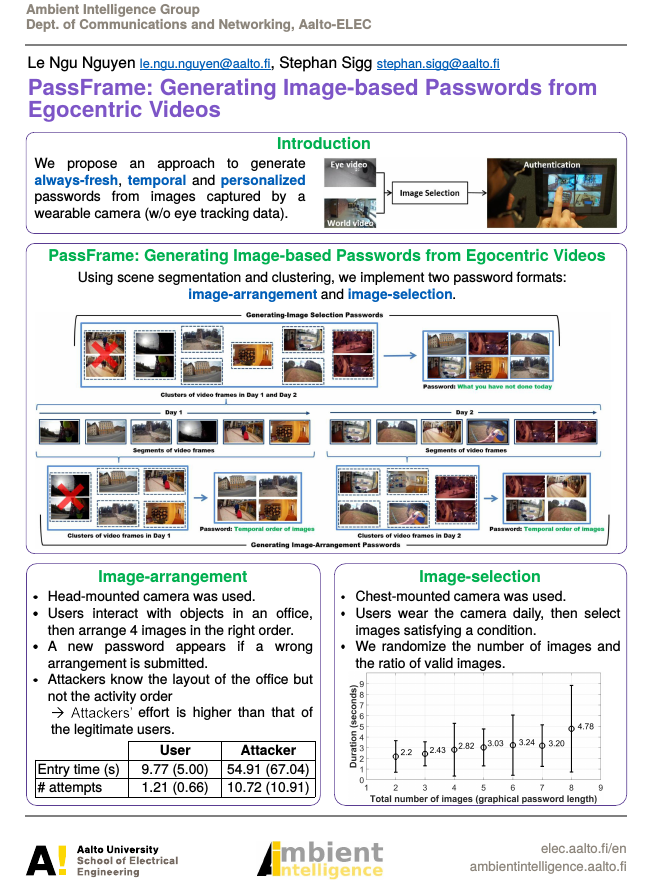

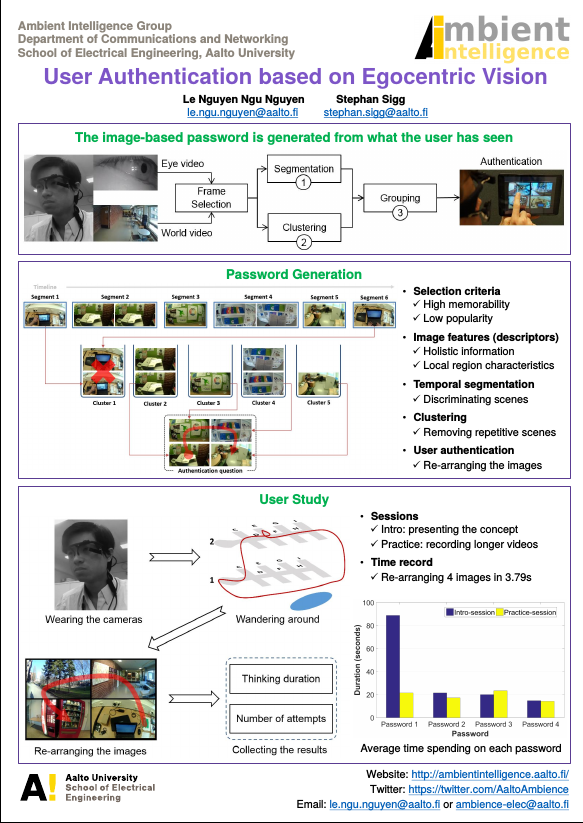

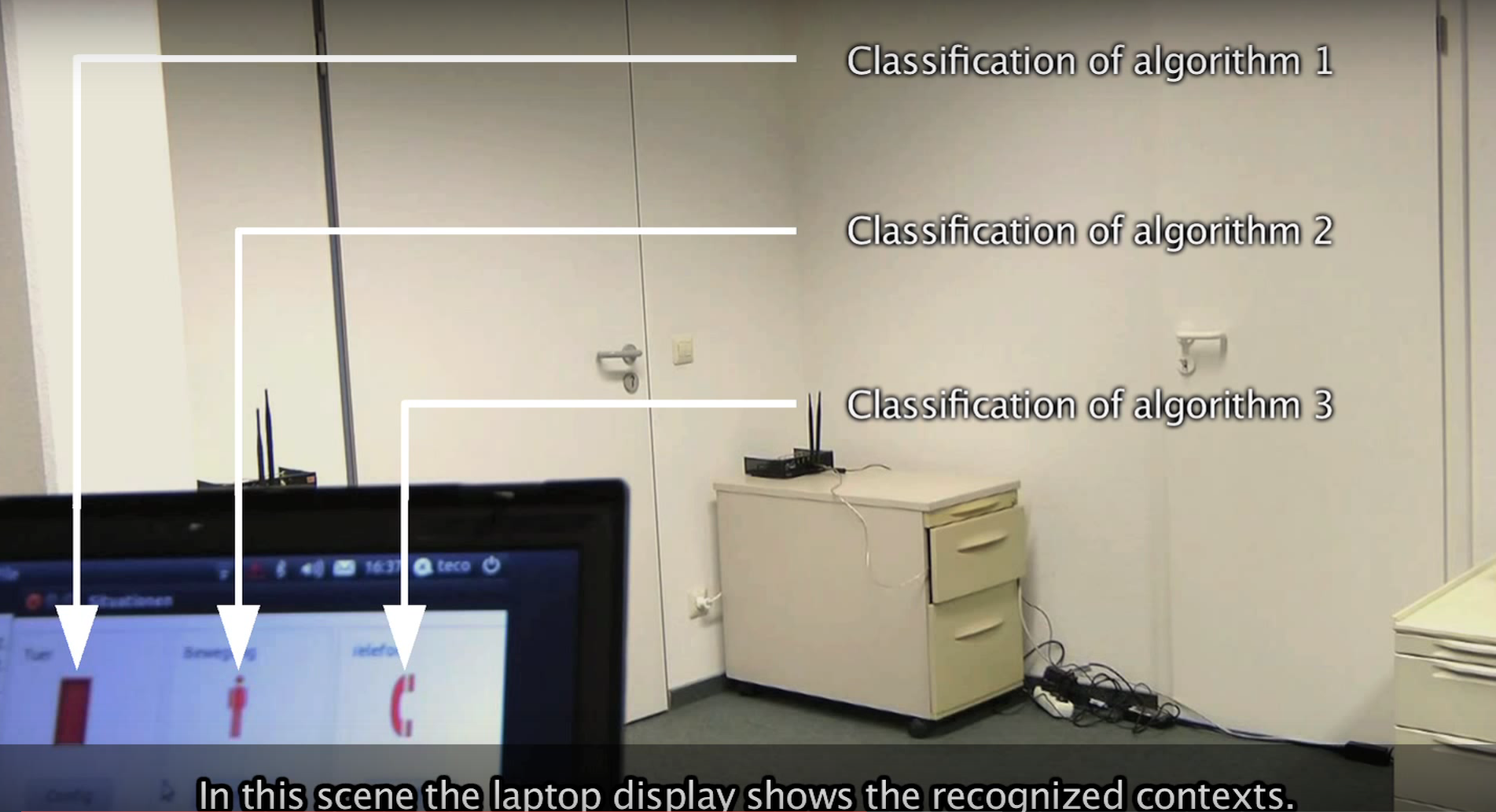

RFexpress! - RF Emotion Recognition in the Wild PassFrame: Generating Image-based Passwords from Egocentric Videos

PassFrame: Generating Image-based Passwords from Egocentric Videos SenseWaves: Radiowaves for context recognition, Pervasive 2011

SenseWaves: Radiowaves for context recognition, Pervasive 2011