Le Ngu Nguyen

- Aalto University

- Maarintie 8

- 02150 Espoo

- Finland

- le.ngu.[lastname][at]aalto.fi

Le is a doctoral student at Aalto University. His research interests include usable security (e.g. audio-based device pairing and image-based user authentication) and machine learning applications in pervasive computing (e.g. sports activity analysis).


2025

(1)

Transient Authentication from First-Person-View Video.

Nguyen, L. N.; Findling, R. D.; Poikela, M.; Zuo, S.; and Sigg, S.

Proc. ACM Interact. Mob. Wearable Ubiquitous Technol., 9(1). March 2025.


@article{10.1145/3712266,

author = {Nguyen, Le Ngu and Findling, Rainhard Dieter and Poikela, Maija and Zuo, Si and Sigg, Stephan},

title = {Transient Authentication from First-Person-View Video},

year = {2025},

issue_date = {March 2025},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {9},

number = {1},

url = {https://doi.org/10.1145/3712266},

doi = {10.1145/3712266},

abstract = {We propose PassFrame, a system which utilizes first-person-view videos to generate personalized authentication challenges based on human episodic memory of event sequences. From the recorded videos, relevant (memorable) scenes are selected to form image-based authentication challenges. These authentication challenges are compatible with a variety of screen sizes and input modalities. As the popularity of using wearable cameras in daily life is increasing, PassFrame may serve as a convenient personalized authentication mechanism to screen-based appliances and services of a camera wearer. We evaluated the system in various settings including a spatially constrained scenario with 12 participants and a deployment on smartphones with 16 participants and more than 9 hours continuous video per participant. The authentication challenge completion time ranged from 2.1 to 9.7 seconds (average: 6 sec), which could facilitate a secure yet usable configuration of three consecutive challenges for each login. We investigated different versions of the challenges to obfuscate potential privacy leakage or ethical concerns with 27 participants. We also assessed the authentication schemes in the presence of informed adversaries, such as friends, colleagues or spouses and were able to detect attacks from diverging login behaviour.},

journal = {Proc. ACM Interact. Mob. Wearable Ubiquitous Technol.},

month = mar,

articleno = {14},

numpages = {31},

keywords = {Always-fresh challenge, First-person-view video, Shoulder-surfing resistance, Transient authentication challenge, Usable security, User authentication},

group = {ambience}}


2021

(4)

Intuitive Privacy from Acoustic Reach: A Case for Networked Voice User-Interfaces.

Bäckström, T.; Das, S.; Zarazaga, P. P.; Fischer, J.; Findling, R. D.; Sigg, S.; and Nguyen, L. N.

In Proc. 2021 ISCA Symposium on Security and Privacy in Speech Communication, pages 57–61, 2021.


@inproceedings{backstrom2021intuitive,

title={Intuitive Privacy from Acoustic Reach: A Case for Networked Voice User-Interfaces},

author={B{\"a}ckstr{\"o}m, Tom and Das, Sneha and Zarazaga, Pablo P{\'e}rez and Fischer, Johannes and Findling, Rainhard Dieter and Sigg, Stephan and Nguyen, Le Ngu},

booktitle={Proc. 2021 ISCA Symposium on Security and Privacy in Speech Communication},

pages={57--61},

year={2021},

group={ambience}

}

Camouflage learning. Feature value obscuring ambient intelligence for constrained devices.

Nguyen, L. N.; Sigg, S.; Lietzen, J.; Findling, R. D.; and Ruttik, K.

IEEE Transactions on Mobile Computing, 1-17. 2021.


@article{Le_2021_TMC,

author={Le Ngu Nguyen and Stephan Sigg and Jari Lietzen and Rainhard Dieter Findling and Kalle Ruttik},

journal={IEEE Transactions on Mobile Computing},

title={Camouflage learning. Feature value obscuring ambient intelligence for constrained devices},

year={2021},

abstract={Ambient intelligence demands collaboration schemes for distributed constrained devices which are not only highly energy efficient in distributed sensing, processing and communication, but which also respect data privacy. Traditional algorithms for distributed processing suffer in Ambient intelligence domains either from limited data privacy, or from their excessive processing demands for constrained distributed devices.

In this paper, we present Camouflage learning, a distributed machine learning scheme that obscures the trained model via probabilistic collaboration using physical-layer computation offloading and demonstrate the feasibility of the approach on backscatter communication prototypes and in comparison with Federated learning. We show that Camouflage learning is more energy efficient than traditional schemes and that it requires less communication overhead while reducing the computation load through physical-layer computation offloading. The scheme is synchronization-agnostic and thus appropriate for sharply constrained, synchronization-incapable devices. We demonstrate model training and inference on four distinct datasets and investigate the performance of the scheme with respect to communication range, impact of challenging communication environments, power consumption, and the backscatter hardware prototype.

},

issue_date = {July 2021},

publisher = {IEEE},

volume = { },

number = { },

pages = {1-17},

group = {ambience},

project = {abacus}

}


Camouflage Learning.

Sigg, S.; Nguyen, L. N.; and Ma, J.

In The 19th International Conference on Pervasive Computing and Communications (PerCom 2021), adjunct, 2021.


@inproceedings{Sigg2020Camouflage,

title={Camouflage Learning},

author={Stephan Sigg and Le Ngu Nguyen and Jing Ma},

booktitle={The 19th International Conference on Pervasive Computing and Communications (PerCom 2021), adjunct},

year={2021},

abstract={Federated learning has been proposed as a concept for distributed machine learning which enforces privacy by avoiding sharing private data with a coordinator or distributed nodes. Instead of gathering datasets to a central server for model training in traditional machine learning, in federated learning, model updates are computed locally at distributed devices and merged at a coordinator. However, information on local data might be leaked through the model updates. We propose Camouflage learning, a distributed machine learning scheme that distributes both the data and the model. Neither the distributed devices nor the coordinator is at any point in time in possession of the complete model. Furthermore, data and model are obfuscated during distributed model inference and distributed model training. Camouflage learning can be implemented with various Machine learning schemes.

},

group = {ambience},

project = {radiosense, abacus}

}


Towards battery-less RF sensing.

Kodali, M.; Nguyen, L. N.; and Sigg, S.

In The 19th International Conference on Pervasive Computing and Communications (PerCom 2021), WiP, 2021.


@inproceedings{Manila2020BatteryLess,

title={Towards battery-less RF sensing},

author={Manila Kodali and Le Ngu Nguyen and Stephan Sigg},

booktitle={The 19th International Conference on Pervasive Computing and Communications (PerCom 2021), WiP},

year={2021},

abstract={Recent work has demonstrated the use of the radio interface as a sensing modality for gestures, activities and situational perception. The field generally moves towards larger bandwidths, multiple antennas, and higher, mmWave frequency domains, which allow for the recognition of minute movements. We envision another set of applications for RF sensing: battery-less autonomous sensing devices. In this work, we investigate transceiver-less passive RF-sensors which are excited by the fluctuation of the received power over the wireless channel. In particular, we demonstrate the use of battery-less RF-sensing for applications of on-body gesture recognition integrated into smart garment, as well as the integration of such sensing capabilities into smart surfaces.

},

group = {ambience},

project = {radiosense,abacus}

}


2020

(3)

Security from Implicit Information.

Nguyen, L. N.

Ph.D. Thesis, Aalto University, September 2020.


@PhDThesis{LeThesis2020,

author = "Le Ngu Nguyen",

title = "Security from Implicit Information",

school = "Aalto University",

year = "2020",

month = "September",

isbn = "978-952-64-0013-6",

url_Paper ={https://aaltodoc.aalto.fi/handle/123456789/46392},

abstract = {We present novel security mechanisms using implicit information extracted from physiological, behavioural, and ambient data. These mechanisms are implemented with reference to device-to-user and inter-device relationships, including: user authentication with transient image-based passwords, device-to-device secure connection initialization based on vocal commands, collaborative inference over the communication channel, and continuous on-body device pairing.

Authentication methods based on passwords require users to explicitly set their passwords and change them regularly. We introduce a method to generate always-fresh authentication challenges from videos collected by wearable cameras. We implement two password formats that expect users to arrange or select images according to their chronological information.

Radio waves are mainly used for data transmission. We implement function computation over the wireless signals to perform collaborative inference. We encode information into burst sequences in such a way that arithmetic functions can be computed using the interference. Hence, data is hidden inside the wireless signals and implicitly aggregated. Our algorithms allow us to train and deploy a classifier efficiently with the support of minimal backscatter devices.

To initialize a connection between a personal device (e.g. smart-phone) and shared appliances (e.g. smart-screens), users are required to explicitly ask for connection information including device identities and PIN codes. We propose to leverage natural vocal commands to select shared appliance types and generate secure communication keys from the audio implicitly. We perform experiments to verify that device proximity defined by audio fingerprints can restrict the range of device-to-device communication.

PIN codes in device pairing must be manually entered or verified by users. This is inconvenient in scenarios when pairing is performed frequently or devices have limited user interfaces. Our methods generate secure pairing keys for on-body devices continuously from sensor data. Our mechanisms automatically disconnect the devices when they leave the user's body. To cover all human activities, we leverage gait in human ambulatory actions and heartbeat in resting postures.},

group = {ambience},

project = {abacus}}


Analysing Ballistocardiography for Pervasive Healthcare.

Hytönen, R.; Tshala, A.; Schreier, J.; Holopainen, M.; Forsman, A.; Oksanen, M.; Findling, R.; Nguyen, L. N.; Jähne-Raden, N.; and Sigg, S.

In 16th International Conference on Mobility, Sensing and Networking (MSN 2020), 2020.


@inproceedings{hytonen2020BCG,

title={Analysing Ballistocardiography for Pervasive Healthcare},

author={Roni Hytönen and Alison Tshala and Jan Schreier and Melissa Holopainen and Aada Forsman and Minna Oksanen and Rainhard Findling and Le Ngu Nguyen and Nico Jähne-Raden and Stephan Sigg},

booktitle={16th International Conference on Mobility, Sensing and Networking (MSN 2020)},

year={2020},

group = {ambience}

}

Provable Consent for Voice User Interfaces.

Sigg, S.; Nguyen, L. N.; Zarazaga, P. P.; and Backstrom, T.

In 18th Annual IEEE International Conference on Pervasive Computing and Communications (PerCom) adjunct, 2020.

PerCom 2020 BEST WIP PAPER


@InProceedings{Sigg_2020_ProvableConsent,

author = {Stephan Sigg and Le Ngu Nguyen and Pablo Perez Zarazaga and Tom Backstrom},

booktitle = {18th Annual IEEE International Conference on Pervasive Computing and Communications (PerCom) adjunct},

title = {Provable Consent for Voice User Interfaces},

year = {2020},

abstract = {The proliferation of acoustic human-computer interaction raises privacy concerns since it allows Voice User Interfaces (VUI) to overhear human speech and to analyze and share content of overheard conversation in cloud datacenters and with third parties. This process is non-transparent regarding when and which audio is recorded, the reach of the speech recording, the information extracted from a recording and the purpose for which it is used. To return control over the use of audio content to the individual who generated it, we promote intuitive privacy for VUIs, featuring a lightweight consent mechanism as well as means of secure verification (proof of consent) for any recorded piece of audio. In particular, through audio fingerprinting and fuzzy cryptography, we establish a trust zone, whose area is implicitly controlled by voice loudness with respect to environmental noise (Signal-to-Noise Ratio (SNR)). Secure keys are exchanged to verify consent on the use of an audio sequence via digital signatures. We performed experiments with different levels of human voice, corresponding to various trust situations (e.g. whispering and group discussion). A second scenario was investigated in which a VUI outside of the trust zone could not obtain the shared secret key.},

note = {PerCom 2020 BEST WIP PAPER},

group = {ambience}

}


2019

(5)

Learning a Classification Model over Vertically-Partitioned Healthcare Data.

Nguyen, L. N.; and Sigg, S.

IEEE Multimedia Communications – Frontiers, SI on Social and Mobile Connected Smart Objects. 2019.


@article{Le_2019_multimedia,

author={Le Ngu Nguyen and Stephan Sigg},

journal={IEEE Multimedia Communications -- Frontiers, SI on Social and Mobile Connected Smart Objects},

title={Learning a Classification Model over Vertically-Partitioned Healthcare Data},

year={2019},

project = {abacus},

group = {ambience}}

Closed-Eye Gaze Gestures: Detection and Recognition of Closed-Eye Movements with Cameras in Smart Glasses.

Findling, R. D.; Nguyen, L. N.; and Sigg, S.

In International Work-Conference on Artificial Neural Networks, 2019.


@InProceedings{Rainhard_2019_iwann,

author={Rainhard Dieter Findling and Le Ngu Nguyen and Stephan Sigg},

title={Closed-Eye Gaze Gestures: Detection and Recognition of Closed-Eye Movements with Cameras in Smart Glasses},

booktitle={International Work-Conference on Artificial Neural Networks},

year={2019},

doi = {10.1007/978-3-030-20521-8_27},

abstract ={Gaze gestures bear potential for user input with mobile devices, especially smart glasses, due to being always available and hands-free. So far, gaze gesture recognition approaches have utilized open-eye movements only and disregarded closed-eye movements. This paper is a first investigation of the feasibility of detecting and recognizing closed-eye gaze gestures from close-up optical sources, e.g. eye-facing cameras embedded in smart glasses. We propose four different closed-eye gaze gesture protocols, which extend the alphabet of existing open-eye gaze gesture approaches. We further propose a methodology for detecting and extracting the corresponding closed-eye movements with full optical flow, time series processing, and machine learning. In the evaluation of the four protocols we find closed-eye gaze gestures to be detected 82.8%-91.6% of the time, and extracted gestures to be recognized correctly with an accuracy of 92.9%-99.2%.},

url_Paper = {http://ambientintelligence.aalto.fi/findling/pdfs/publications/Findling_19_ClosedEyeGaze.pdf},

project = {hidemygaze},

group = {ambience}}


Learning with Vertically Partitioned Data, Binary Feedback and Random Parameter update.

Nguyen, L. N.; and Sigg, S.

In Workshop on Hot Topics in Social and Mobile Connected Smart Objects, in conjunction with IEEE International Conference on Computer Communications (INFOCOM), 2019.


@InProceedings{Le_2019_hotsalsa,

author={Le Ngu Nguyen and Stephan Sigg},

title={Learning with Vertically Partitioned Data, Binary Feedback and Random Parameter update},

booktitle={Workshop on Hot Topics in Social and Mobile Connected Smart Objects, in conjunction with IEEE International Conference on Computer Communications (INFOCOM)},

year={2019},

project = {abacus},

group = {ambience}}

Security Properties of Gait for Mobile Device Pairing.

Bruesch, A.; Nguyen, L. N.; Schuermann, D.; Sigg, S.; and Wolf, L.

IEEE Transactions on Mobile Computing. 2019.


@article{Schuermann_2019_tmc,

author={Arne Bruesch and Le Ngu Nguyen and Dominik Schuermann and Stephan Sigg and Lars Wolf},

journal={IEEE Transactions on Mobile Computing},

title={Security Properties of Gait for Mobile Device Pairing},

year={2019},

abstract = {Gait has been proposed as a feature for mobile device pairing across arbitrary positions on the human body. Results indicate that the correlation in gait-based features across different body locations is sufficient to establish secure device pairing. However, the population size of the studies is limited and powerful attackers with e.g. capability of video recording are not considered. We present a concise discussion of security properties of gait-based pairing schemes including quantization, classification and analysis of attack surfaces, of statistical properties of generated sequences, an entropy analysis, as well as possible threats and security weaknesses. For one of the schemes considered, we present modifications to fix an identified security flaw. As a general limitation of gait-based authentication or pairing systems, we further demonstrate that an adversary with video support can create key sequences that are sufficiently close to on-body generated acceleration sequences to breach gait-based security mechanisms.},

doi = {10.1109/TMC.2019.2897933},

project = {bandana},

group = {ambience}}


Mobile Brainwaves: On the Interchangeability of Simple Authentication Tasks with Low-Cost, Single-Electrode EEG Devices.

Haukipuro, E.; Kolehmainen, V.; Myllarinen, J.; Remander, S.; Salo, J. T.; Takko, T.; Nguyen, L. N.; Sigg, S.; and Findling, R.

IEICE Transactions, Special issue on Sensing, Wireless Networking, Data Collection, Analysis and Processing Technologies for Ambient Intelligence with Internet of Things. 2019.


@article{Haukipuro_2019_IEICE,

author={Eeva-Sofia Haukipuro and Ville Kolehmainen and Janne Myllarinen and Sebastian Remander and Janne T. Salo and Tuomas Takko and Le Ngu Nguyen and Stephan Sigg and Rainhard Findling},

journal={IEICE Transactions, Special issue on Sensing, Wireless Networking, Data Collection, Analysis and Processing Technologies for Ambient Intelligence with Internet of Things},

title={Mobile Brainwaves: On the Interchangeability of Simple Authentication Tasks with Low-Cost, Single-Electrode EEG Devices},

year={2019},

url_Paper = {http://ambientintelligence.aalto.fi/findling/pdfs/publications/Haukipuro_19_MobileBrainwavesInterchangeability.pdf},

abstract = {Electroencephalography (EEG) for biometric authentication has received some attention in recent years. In this paper, we explore the effect of three simple EEG related authentication tasks, namely resting, thinking about a picture, and moving a single finger, on mobile, low-cost, single electrode based EEG authentication. We present details of our authentication pipeline, including extracting features from the frequency power spectrum and MFCC, and training a multilayer perceptron classifier for authentication. For our evaluation we record an EEG dataset of 27 test subjects. We use a baseline, task-agnostic, and task-specific evaluation setup to investigate if different tasks can be used in place of each other for authentication. We further evaluate if tasks themselves can be told apart from each other. Evaluation results suggest that tasks differ, hence to some extent are distinguishable, as well as that our authentication approach can work in a task-specific as well as in a task-agnostic manner.},

doi = {10.1587/transcom.2018SEP0016},

group = {ambience}

}

Electroencephalography (EEG) for biometric authentication has received some attention in recent years. In this paper, we explore the effect of three simple EEG related authentication tasks, namely resting, thinking about a picture, and moving a single finger, on mobile, low-cost, single electrode based EEG authentication. We present details of our authentication pipeline, including extracting features from the frequency power spectrum and MFCC, and training a multilayer perceptron classifier for authentication. For our evaluation we record an EEG dataset of 27 test subjects. We use a baseline, task-agnostic, and task-specific evaluation setup to investigate if different tasks can be used in place of each other for authentication. We further evaluate if tasks themselves can be told apart from each other. Evaluation results suggest that tasks differ, hence to some extent are distinguishable, as well as that our authentication approach can work in a task-specific as well as in a task-agnostic manner.

2018

(5)

Moves like Jagger: Exploiting variations in instantaneous gait for spontaneous device pairing.

Schürmann, D.; Brüsch, A.; Nguyen, N.; Sigg, S.; and Wolf, L.

Pervasive and Mobile Computing, 47. July 2018.

![paper Moves like Jagger: Exploiting variations in instantaneous gait for spontaneous device pairing [link]](//bibbase.org/img/filetypes/link.svg) paper

doi

link

bibtex

abstract

7 downloads

@article{Schuermann_2018_pmc,

title = "Moves like Jagger: Exploiting variations in instantaneous gait for spontaneous device pairing",

journal = "Pervasive and Mobile Computing",

year = "2018",

volume = "47",

month = "July",

doi = "10.1016/j.pmcj.2018.03.006",

url_Paper = {https://authors.elsevier.com/c/1WufU5bwSmo0nk},

author = "Dominik Schürmann and Arne Brüsch and Ngu Nguyen and Stephan Sigg and Lars Wolf",

abstract = "Seamless device pairing conditioned on the context of use fosters novel application domains and ease of use. Examples are automatic device pairings with objects interacted with, such as instrumented shopping baskets, electronic tourist guides (e.g. tablets), fitness trackers or other fitness equipment. We propose a cryptographically secure spontaneous authentication scheme, BANDANA, that exploits correlation in acceleration sequences from devices worn or carried together by the same person to extract always-fresh secure secrets. On two real world datasets with 15 and 482 subjects, {BANDANA} generated fingerprints achieved intra- (50%) and inter-body (> 75%) similarity sufficient for secure key generation via fuzzy cryptography. Using {BCH} codes, best results are achieved with 48 bit fingerprints from 12 gait cycles generating 16 bit long keys. Statistical bias of the generated fingerprints has been evaluated as well as vulnerabilities towards relevant attack scenarios.",

project = {bandana},

group = {ambience}

}

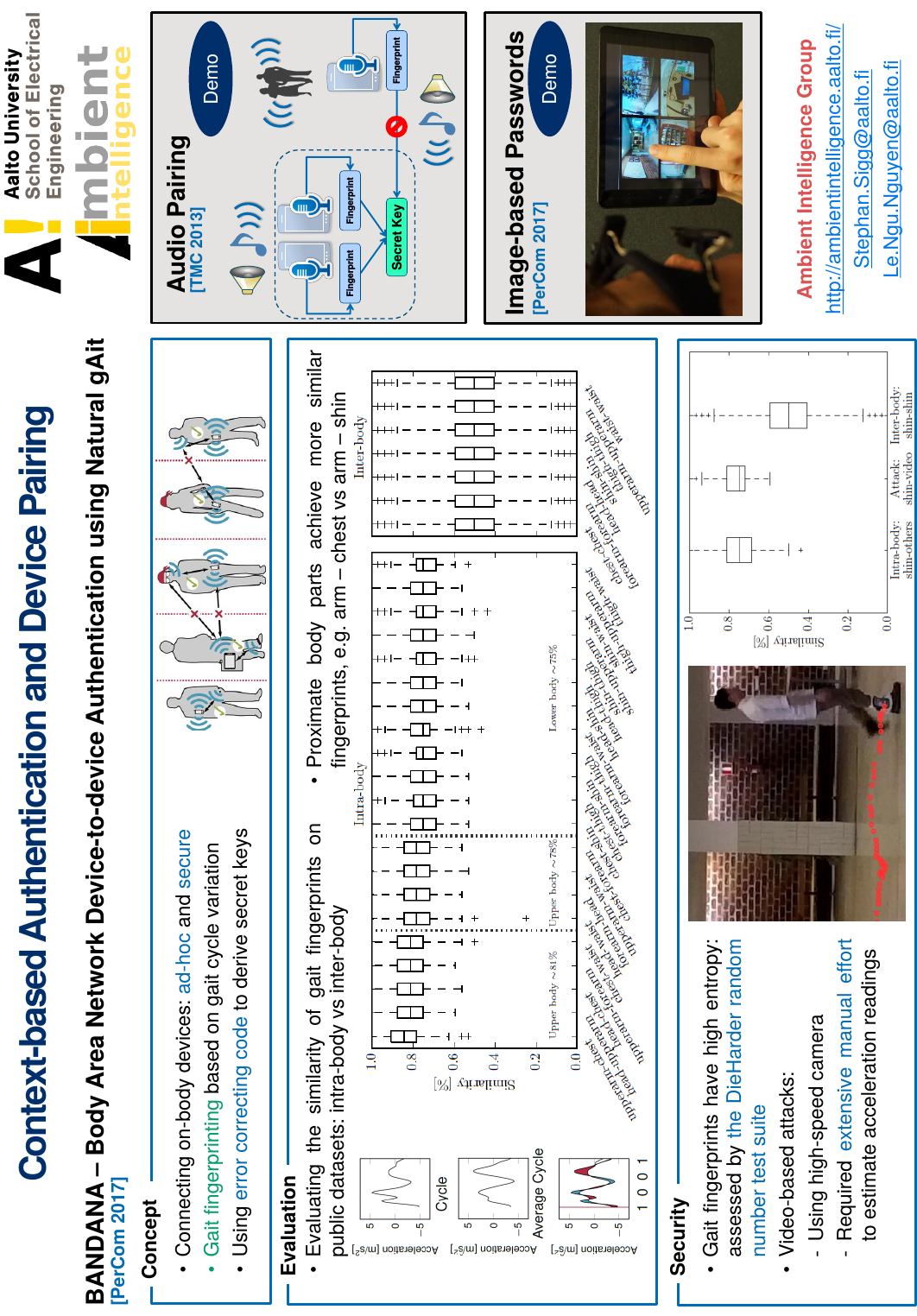

Seamless device pairing conditioned on the context of use fosters novel application domains and ease of use. Examples are automatic device pairings with objects interacted with, such as instrumented shopping baskets, electronic tourist guides (e.g. tablets), fitness trackers or other fitness equipment. We propose a cryptographically secure spontaneous authentication scheme, BANDANA, that exploits correlation in acceleration sequences from devices worn or carried together by the same person to extract always-fresh secure secrets. On two real world datasets with 15 and 482 subjects, BANDANA generated fingerprints achieved intra- (50%) and inter-body (> 75%) similarity sufficient for secure key generation via fuzzy cryptography. Using BCH codes, best results are achieved with 48 bit fingerprints from 12 gait cycles generating 16 bit long keys. Statistical bias of the generated fingerprints has been evaluated as well as vulnerabilities towards relevant attack scenarios.

Representation Learning for Sensor-based Device Pairing.

Nguyen, L. N.; Sigg, S.; and Kulau, U.

In 2018 IEEE International Conference on Pervasive Computing and Communication (WiP), March 2018.

link bibtex

@INPROCEEDINGS{Le_2018_PerCom,

author={Le Ngu Nguyen and Stephan Sigg and Ulf Kulau},

booktitle={2018 IEEE International Conference on Pervasive Computing and Communication (WiP)},

title={Representation Learning for Sensor-based Device Pairing},

year={2018},

month={March},

group = {ambience}}

Demo of BANDANA – Body Area Network Device-to-device Authentication Using Natural gAit.

Nguyen, L. N.; Kaya, C. Y.; Bruesch, A.; Schuermann, D.; Sigg, S.; and Wolf, L.

In 2018 IEEE International Conference on Pervasive Computing and Communication (Demo), March 2018.

link bibtex

@INPROCEEDINGS{Le_2018_PerComDemo,

author={Le Ngu Nguyen and Caglar Yuce Kaya and Arne Bruesch and Dominik Schuermann and Stephan Sigg and Lars Wolf},

booktitle={2018 IEEE International Conference on Pervasive Computing and Communication (Demo)},

title={Demo of BANDANA -- Body Area Network Device-to-device Authentication Using Natural gAit},

year={2018},

month={March},

project = {bandana},

group = {ambience}}

Secure Context-based Pairing for Unprecedented Devices.

Nguyen, L. N.; and Sigg, S.

In 2018 IEEE International Conference on Pervasive Computing and Communication (adjunct), March 2018.

link bibtex

@INPROCEEDINGS{Le_2018_PerComWS,

author={Le Ngu Nguyen and Stephan Sigg},

booktitle={2018 IEEE International Conference on Pervasive Computing and Communication (adjunct)},

title={Secure Context-based Pairing for Unprecedented Devices},

year={2018},

month={March},

group = {ambience}}

User Authentication based on Personal Image Experiences.

Nguyen, L. N.; and Sigg, S.

In 2018 IEEE International Conference on Pervasive Computing and Communication (adjunct), March 2018.

link bibtex

@INPROCEEDINGS{Le_2018_PerComWS2,

author={Le Ngu Nguyen and Stephan Sigg},

booktitle={2018 IEEE International Conference on Pervasive Computing and Communication (adjunct)},

title={User Authentication based on Personal Image Experiences},

year={2018},

month={March},

group = {ambience}}

2017

(2)

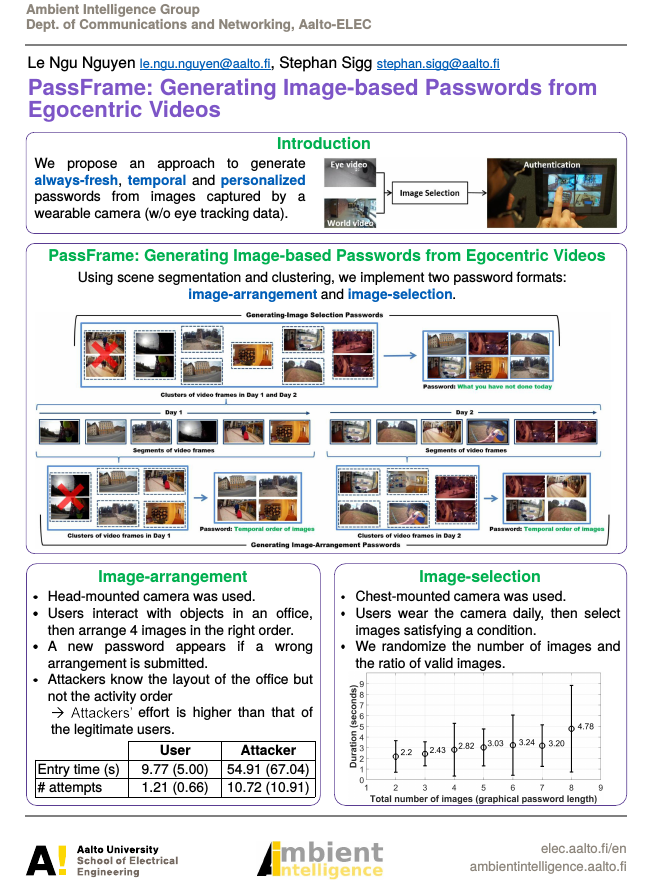

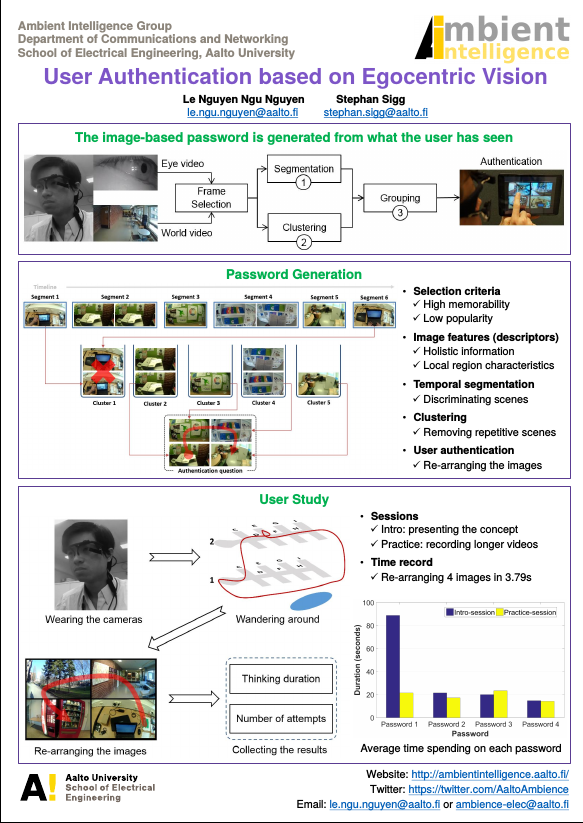

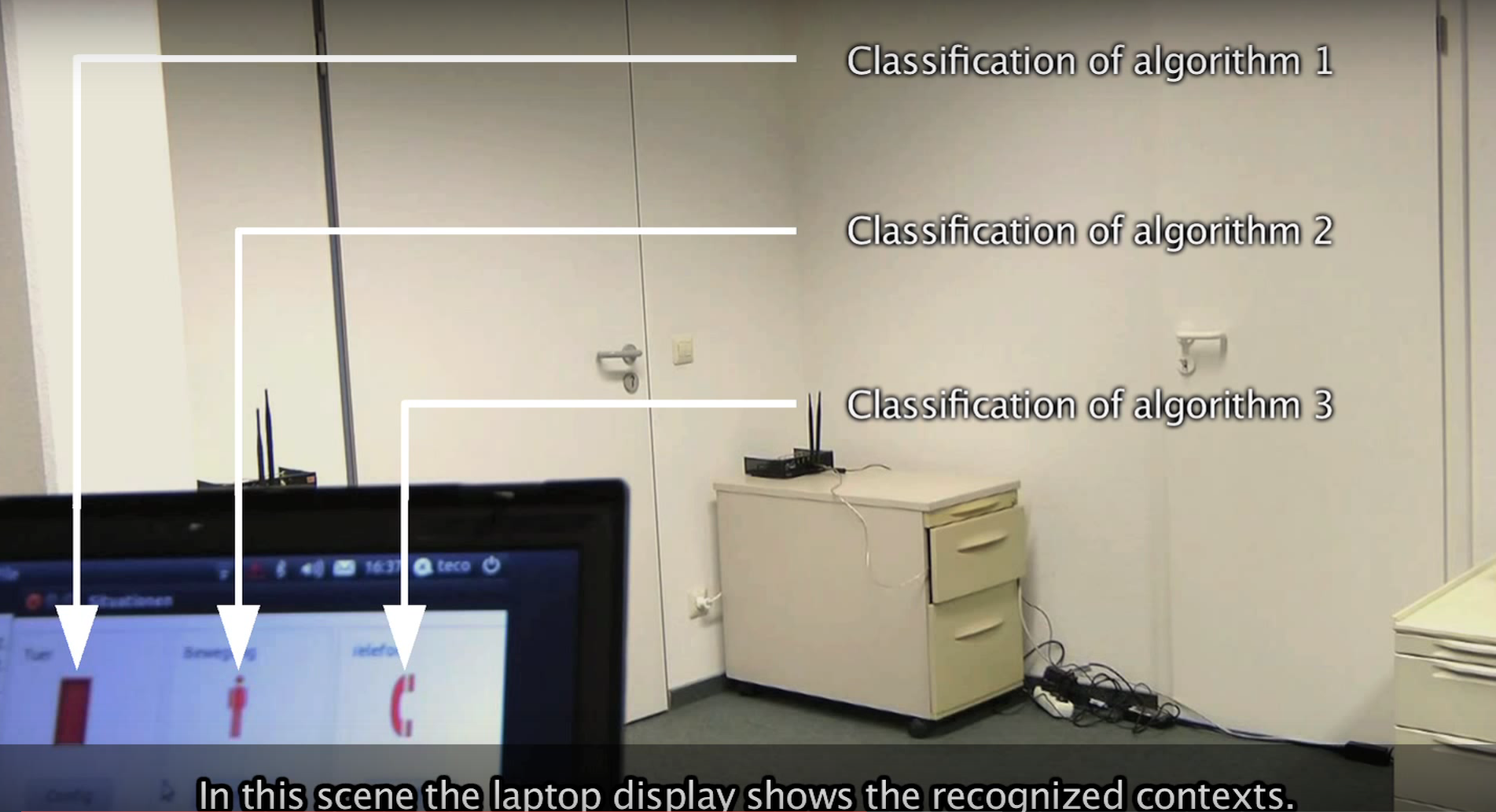

PassFrame: Generating Image-based Passwords from Egocentric Videos.

Nguyen, L. N.; and Sigg, S.

In 2017 IEEE International Conference on Pervasive Computing and Communication (WiP), March 2017.

link bibtex abstract

@INPROCEEDINGS{LeWiP_2017_PerCom,

author={Le Ngu Nguyen and Stephan Sigg},

booktitle={2017 IEEE International Conference on Pervasive Computing and Communication (WiP)},

title={PassFrame: Generating Image-based Passwords from Egocentric Videos},

year={2017},

abstract={Wearable cameras have been widely used not only in sport and public safety but also as life-logging gadgets. They record diverse visual information that is meaningful to the users. In this paper, we analyse first-person-view videos to develop a personalized user authentication mechanism. Our proposed algorithm generates provisional passwords which benefit a variety of purposes such as normally unlocking a mobile device or fallback authentication. First, representative frames are extracted from the egocentric videos. Then, they are split into distinguishable segments before a clustering procedure is applied to discard repetitive scenes. The whole process aims to retain memorable images to form the authentication challenges. We integrate eye tracking data to select informative sequences of video frames and suggest an alternative method if an eye-facing camera is not available. To evaluate our system, we perform the experiments in different settings including object-interaction activities and traveling contexts. Even though our mechanism produces variable passwords, the log-in effort is comparable with approaches based on static challenges.},

%doi={10.1109/PERCOMW.2016.7457119},

month={March},

project={passframe},

group = {ambience}}

Wearable cameras have been widely used not only in sport and public safety but also as life-logging gadgets. They record diverse visual information that is meaningful to the users. In this paper, we analyse first-person-view videos to develop a personalized user authentication mechanism. Our proposed algorithm generates provisional passwords which benefit a variety of purposes such as normally unlocking a mobile device or fallback authentication. First, representative frames are extracted from the egocentric videos. Then, they are split into distinguishable segments before a clustering procedure is applied to discard repetitive scenes. The whole process aims to retain memorable images to form the authentication challenges. We integrate eye tracking data to select informative sequences of video frames and suggest an alternative method if an eye-facing camera is not available. To evaluate our system, we perform the experiments in different settings including object-interaction activities and traveling contexts. Even though our mechanism produces variable passwords, the log-in effort is comparable with approaches based on static challenges.

Demo of PassFrame: Generating Image-based Passwords from Egocentric Videos.

Nguyen, L. N.; and Sigg, S.

In 2017 IEEE International Conference on Pervasive Computing and Communication (demo), March 2017.

link bibtex abstract

@INPROCEEDINGS{LeDemo_2017_PerCom,

author={Le Ngu Nguyen and Stephan Sigg},

booktitle={2017 IEEE International Conference on Pervasive Computing and Communication (demo)},

title={Demo of PassFrame: Generating Image-based Passwords from Egocentric Videos},

year={2017},

abstract={We demonstrate a personalized user authentication mechanism based on first-person-view videos. Our proposed algorithm forms temporary image-based authentication challenges which benefit a variety of purposes such as unlocking a mobile device or fallback authentication. First, representative frames are extracted from the egocentric videos. Then, they are split into distinguishable segments before repetitive scenes are discarded through a clustering procedure. We integrate eye tracking data to select informative sequences of video frames and suggest an alternative method based on image quality. For evaluation, we perform experiments in different settings including object-interaction activities and traveling contexts. We assessed the authentication scheme in the presence of an informed attacker and observed that the entry time is significantly higher than that of the legitimate user.},

%doi={10.1109/PERCOMW.2016.7457119},

month={March},

project={passframe},

group = {ambience}}

We demonstrate a personalized user authentication mechanism based on first-person-view videos. Our proposed algorithm forms temporary image-based authentication challenges which benefit a variety of purposes such as unlocking a mobile device or fallback authentication. First, representative frames are extracted from the egocentric videos. Then, they are split into distinguishable segments before repetitive scenes are discarded through a clustering procedure. We integrate eye tracking data to select informative sequences of video frames and suggest an alternative method based on image quality. For evaluation, we perform experiments in different settings including object-interaction activities and traveling contexts. We assessed the authentication scheme in the presence of an informed attacker and observed that the entry time is significantly higher than that of the legitimate user.

2015

(2)

Basketball Activity Recognition Using Wearable Inertial Measurement Units.

Nguyen, L. N.; Rodríguez-Martín, D.; Català, A.; Pérez-López, C.; Samà, A.; and Cavallaro, A.

In Proceedings of the XVI International Conference on Human Computer Interaction, of Interacción '15, 2015.

doi link bibtex

@inproceedings{Nguyen:2015:BAR:2829875.2829930,

author = {Le Ngu Nguyen and Rodr\'{\i}guez-Mart\'{\i}n, Daniel and Catal\`{a}, Andreu and P{\'e}rez-L\'{o}pez, Carlos and Sam\`{a}, Albert and Cavallaro, Andrea},

title = {Basketball Activity Recognition Using Wearable Inertial Measurement Units},

booktitle = {Proceedings of the XVI International Conference on Human Computer Interaction},

series = {Interacci\'{o}n '15},

year = {2015},

isbn = {978-1-4503-3463-1},

articleno = {60},

numpages = {6},

doi = {10.1145/2829875.2829930},

group = {ambience}}

Feature Learning for Interaction Activity Recognition in RGBD Videos.

Nguyen, L. N.

CoRR, abs/1508.02246. 2015.

![Paper Feature Learning for Interaction Activity Recognition in RGBD Videos [link]](//bibbase.org/img/filetypes/link.svg) Paper

link

bibtex

@article{DBLP:journals/corr/Nguyen15b,

author = {Le Ngu Nguyen},

title = {Feature Learning for Interaction Activity Recognition in {RGBD} Videos},

journal = {CoRR},

volume = {abs/1508.02246},

year = {2015},

url = {http://arxiv.org/abs/1508.02246},

timestamp = {Tue, 01 Sep 2015 14:42:40 +0200},

biburl = {http://dblp.uni-trier.de/rec/bib/journals/corr/Nguyen15b},

bibsource = {dblp computer science bibliography, http://dblp.org},

group = {ambience}}

2014

(1)

Secure Authentication for Mobile Devices Based on Acoustic Background Fingerprint.

Quach, Q.; Nguyen, L. N.; and Dinh, T.

In Knowledge and Systems Engineering: Proceedings of the Fifth International Conference KSE 2013, Volume 1, pages 375–387. Huynh, N. V.; Denoeux, T.; Tran, H. D.; Le, C. A.; and Pham, B. S., editor(s). Springer International Publishing, Cham, 2014.

![Paper Secure Authentication for Mobile Devices Based on Acoustic Background Fingerprint [link]](//bibbase.org/img/filetypes/link.svg) Paper

doi

link

bibtex

@Inbook{Quach2014,

author="Quan Quach and Le Ngu Nguyen and Tien Dinh",

editor="Huynh, Nam Van and Denoeux, Thierry and Tran, Hung Dang and Le, Cuong Anh and Pham, Bao Son",

title="Secure Authentication for Mobile Devices Based on Acoustic Background Fingerprint",

bookTitle="Knowledge and Systems Engineering: Proceedings of the Fifth International Conference KSE 2013, Volume 1",

year="2014",

publisher="Springer International Publishing",

address="Cham",

pages="375--387",

isbn="978-3-319-02741-8",

doi="10.1007/978-3-319-02741-8_32",

url="http://dx.doi.org/10.1007/978-3-319-02741-8_32",

group = {ambience}}

2012

(3)

AdhocPairing: Spontaneous audio based secure device pairing for Android mobile devices.

Sigg, S.; Nguyen, L. N.; Huynh, A.; and Ji, Y.

In Proceedings of the 4th International Workshop on Security and Privacy in Spontaneous Interaction and Mobile Phone Use, in conjunction with Pervasive 2012, June 2012.

link bibtex

@InProceedings{ Cryptography_Sigg_2012b,

author = "Stephan Sigg and Le Ngu Nguyen and An Huynh and Yusheng Ji",

title = "AdhocPairing: Spontaneous audio based secure device pairing for Android mobile devices",

booktitle = "Proceedings of the 4th International Workshop on Security and Privacy in Spontaneous Interaction and Mobile Phone Use, in conjunction with Pervasive 2012",

year = "2012",

month = June,

ibrauthors = "sigg",

ibrgroups = "dus",

group = {ambience}}

Using ambient audio in secure mobile phone communication.

Nguyen, L. N.; Sigg, S.; Huynh, A.; and Ji, Y.

In Proceedings of the 10th IEEE International Conference on Pervasive Computing and Communications (PerCom), WiP session, March 2012.

link bibtex

@InProceedings{ Cryptography_Nguyen_2012,

author = "Le Ngu Nguyen and Stephan Sigg and An Huynh and Yusheng Ji",

title = "Using ambient audio in secure mobile phone communication",

booktitle = "Proceedings of the 10th IEEE International Conference on Pervasive Computing and Communications (PerCom), WiP session",

year = "2012",

month = March,

group = {ambience}}

Pattern-based Alignment of Audio Data for Ad-hoc Secure Device Pairing.

Nguyen, L. N.; Sigg, S.; Huynh, A.; and Ji, Y.

In Proceedings of the 16th annual International Symposium on Wearable Computers (ISWC), June 2012.

link bibtex

@InProceedings{ Cryptography_Nguyen_2012-2,

author = "Le Ngu Nguyen and Stephan Sigg and An Huynh and Yusheng Ji",

title = "Pattern-based Alignment of Audio Data for Ad-hoc Secure Device Pairing",

booktitle = "Proceedings of the 16th annual International Symposium on Wearable Computers (ISWC)",

year = "2012",

month = June,

ibrauthors = "sigg",

ibrgroups = "dus",

group = {ambience}}

Beamsteering for Training-free Counting of

Multiple Humans Performing Distinct Activities

Beamsteering for Training-free Counting of

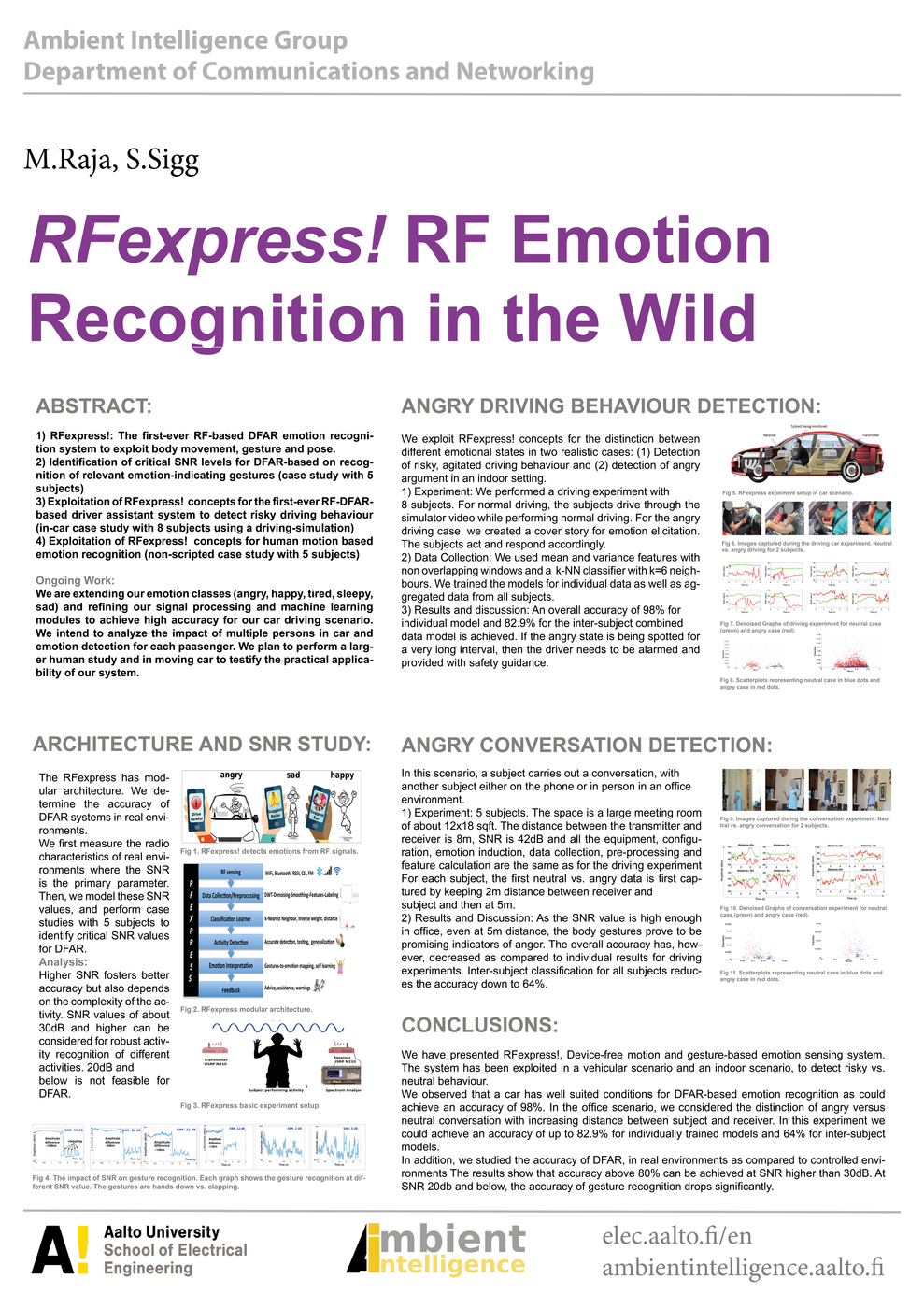

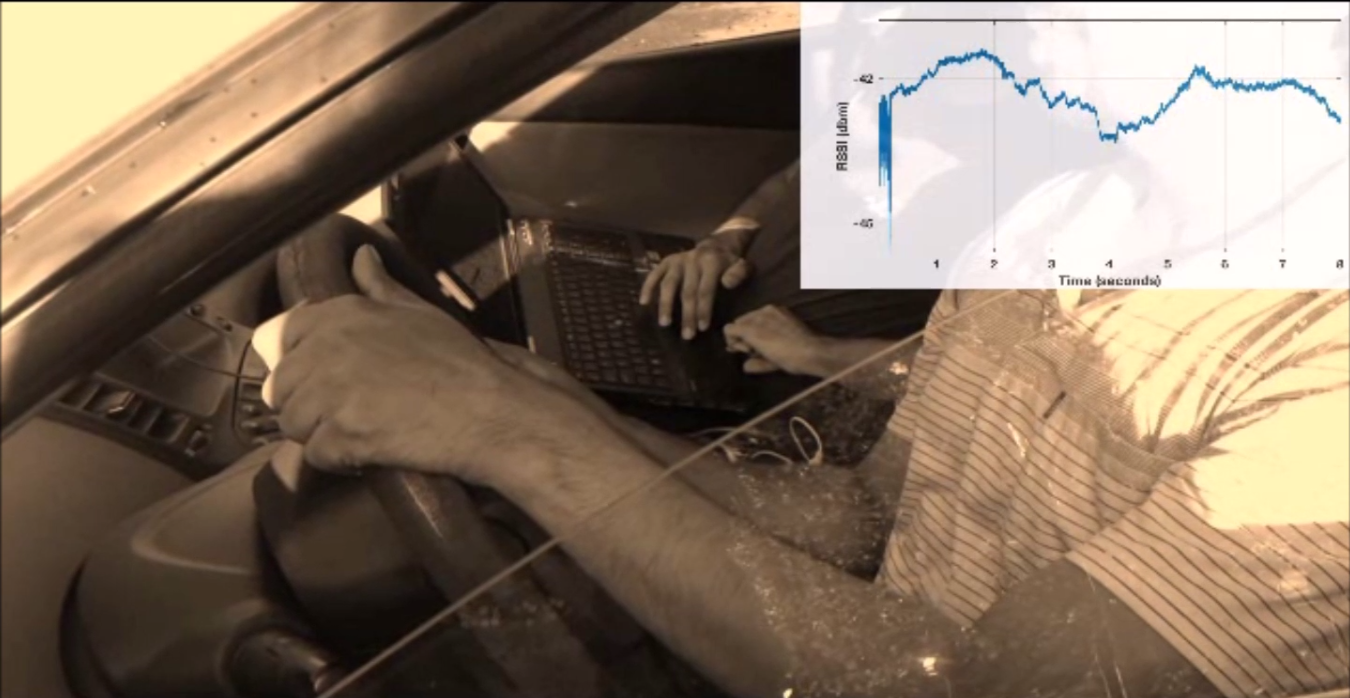

Multiple Humans Performing Distinct Activities RFexpress! - RF Emotion Recognition in the Wild

RFexpress! - RF Emotion Recognition in the Wild PassFrame: Generating Image-based Passwords from Egocentric Videos

PassFrame: Generating Image-based Passwords from Egocentric Videos SenseWaves: Radiowaves for context recognition, Pervasive 2011

SenseWaves: Radiowaves for context recognition, Pervasive 2011