Authentication from Wearable Cameras

Personal devices (e.g. laptops, tablets, and mobile phones) are ubiquitous in daily life and store users' essential data. They require secure but convenient user authentication schemes. Graphical passwords, such as the 9-dot log-in screen on the Android platform, have become more popular than classical text-based approaches because they are convenient on touch-based interfaces. Nevertheless, pattern-based authentication is vulnerable to shoulder-surfing attacks and smudge attacks (reconstructing the secret pattern from images of the smartphone screen). Changing graphical patterns frequently and using multi-factor authentication are countermeasures against these attacks, but they impose a higher burden on users. Although biometric security mechanisms are increasingly applied for authentication on mobile devices, they are inherently limited and difficult to replace if lost or compromised.

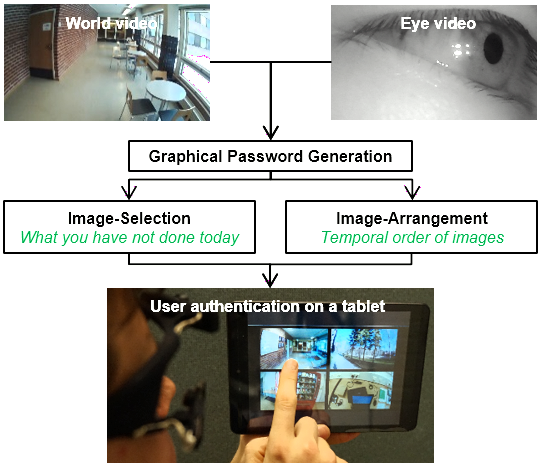

We propose to generate always-fresh and personalized authentication challenges from images captured by a wearable camera. A set of photos is displayed on the screen, and the legitimate user exploits her implicit knowledge of the chronological order of the images to log in. We analyse first-person-view videos to facilitate a personalized password generation mechanism. Our proposed algorithm generates provisional image-based passwords that serve a variety of purposes, such as unlocking a mobile device or fallback authentication. First, representative frames are extracted from the egocentric videos. Then, they are split into distinguishable segments before a clustering procedure is applied to discard repetitive scenes. The whole process aims to retain memorable images to form the authentication challenges. We integrate eye tracking data to select informative sequences of video frames and suggest a blurriness-based method if an eye-facing camera is not available.
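As a rough illustration of blurriness-based frame selection, the sketch below scores each grayscale frame by the variance of its Laplacian response and keeps the sharpest one. The variance-of-Laplacian measure is a common sharpness heuristic that we assume here for illustration; it is not necessarily the blurriness measure used in the project. The function names `laplacian_variance` and `sharpest_frame` are hypothetical, and frames are represented as plain nested lists of pixel intensities.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Sharpness score (assumed heuristic): variance of a 4-neighbour
    Laplacian computed over the interior pixels of a grayscale image."""
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    # Blurry frames have weak edges, so their responses vary little.
    return pvariance(responses)


def sharpest_frame(frames):
    """Return the index of the frame with the highest sharpness score."""
    return max(range(len(frames)), key=lambda i: laplacian_variance(frames[i]))
```

In practice the same score could be used with a threshold to discard blurry frames before segmentation and clustering, but the threshold would have to be tuned per camera.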

User Study

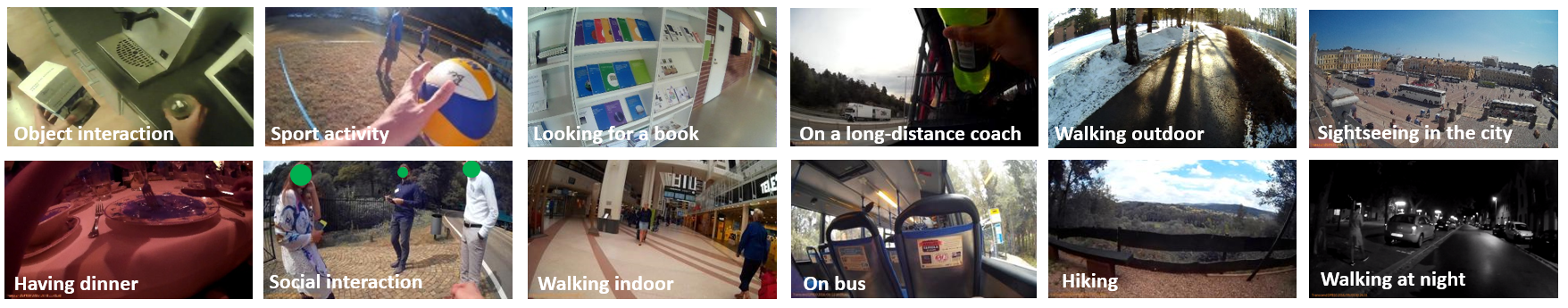

We employed two wearable cameras in our user studies. The devices were mounted in two positions: on the head and on the chest. The wearable cameras allow users to continuously capture videos or images of the environment from the first-person perspective (or egocentric view). The captured photos contain diverse details due to the subjects' activities, body movements, locations, weather, light intensity, and scene appearance. We present results for two scenarios: an object-interaction setting in an office and a daily-condition study in which subjects wore the camera for several days. The average entry time of the former is 9.77 seconds while that of the latter is 3.10 seconds, which is comparable with static password schemes.

Object-interaction Scenario

We prepared a room for object-interaction activities.

The setting was challenging for our system because all activities occurred at the same location.

Thus, object appearance and interaction were the only visual cues available to our video analysis algorithm.

The room in this experiment was a typical workplace with basic furniture and office equipment.

The room was new to all seven participants (four females, two with glasses) in the experiment.

The subjects performed the following activities in arbitrary order, then solved passwords generated from the recorded egocentric videos.

The activities included: reading a book, working on a laptop, writing on paper, writing on a board, viewing a poster, talking to a person, using a smartphone, unboxing an item, playing a board game, and using a paper-cutter.

The objects were placed on the desks (laptop, paper, gameboard, and paper-cutter), hung on the wall (poster and board), or kept in the subject's pocket (smartphone).

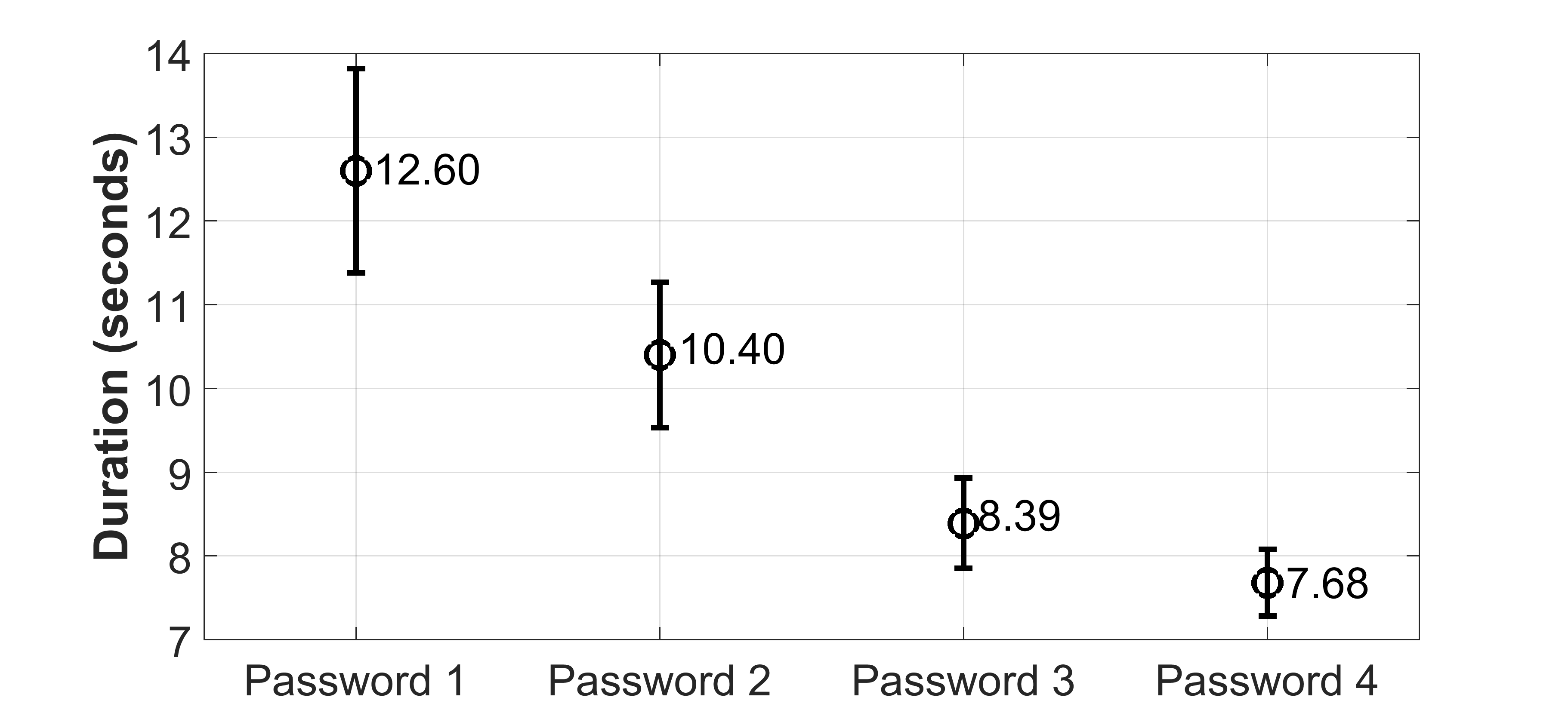

We used the head-mounted camera in this scenario.

The image-arrangement scheme was investigated in this setting.

To assess the users' effort, we asked each subject to answer a sequence of different image-based passwords.

To make the authentication scheme more secure, a new password is generated whenever the user arranges the images in an incorrect chronological order.

Averaged over all four passwords, each subject needed 9.77 seconds and 1.21 attempts to solve a password.
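The image-arrangement scheme can be sketched as follows. This is a minimal illustration, not the prototype's implementation: the number of images per challenge (`k=4`), the attempt limit (`max_attempts=3`), and the names `make_arrangement_challenge` and `authenticate` are all assumptions. What it does take from the description above is that the user must restore the chronological order and that a fresh challenge is generated after every wrong answer.

```python
import random


def make_arrangement_challenge(frames, k=4, rng=random):
    """frames: list of (timestamp, image_id) pairs with unique timestamps.
    Returns (shown, answer): the shuffled image ids and their true order."""
    chosen = rng.sample(frames, k)
    answer = [image for _, image in sorted(chosen, key=lambda f: f[0])]
    shown = answer[:]
    # Reshuffle until the displayed order differs from the solution.
    while shown == answer and k > 1:
        rng.shuffle(shown)
    return shown, answer


def authenticate(frames, ask_user, k=4, max_attempts=3, rng=random):
    """ask_user(shown) returns the user's ordering of the shown images.
    A new challenge is drawn after each wrong answer (attempt limit assumed)."""
    for attempt in range(1, max_attempts + 1):
        shown, answer = make_arrangement_challenge(frames, k, rng)
        if ask_user(shown) == answer:
            return True, attempt
    return False, max_attempts
```

Regenerating the challenge on failure means an attacker cannot refine a guess against a fixed set of images; each attempt starts from scratch.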

Daily Condition Scenario

To evaluate our system in a long-term context, we analysed two scenarios in which the subjects wore the cameras for several days and answered the authentication challenges.

We used the chest-mounted camera in this scenario.

In this study, we focus on the image-selection authentication scheme.

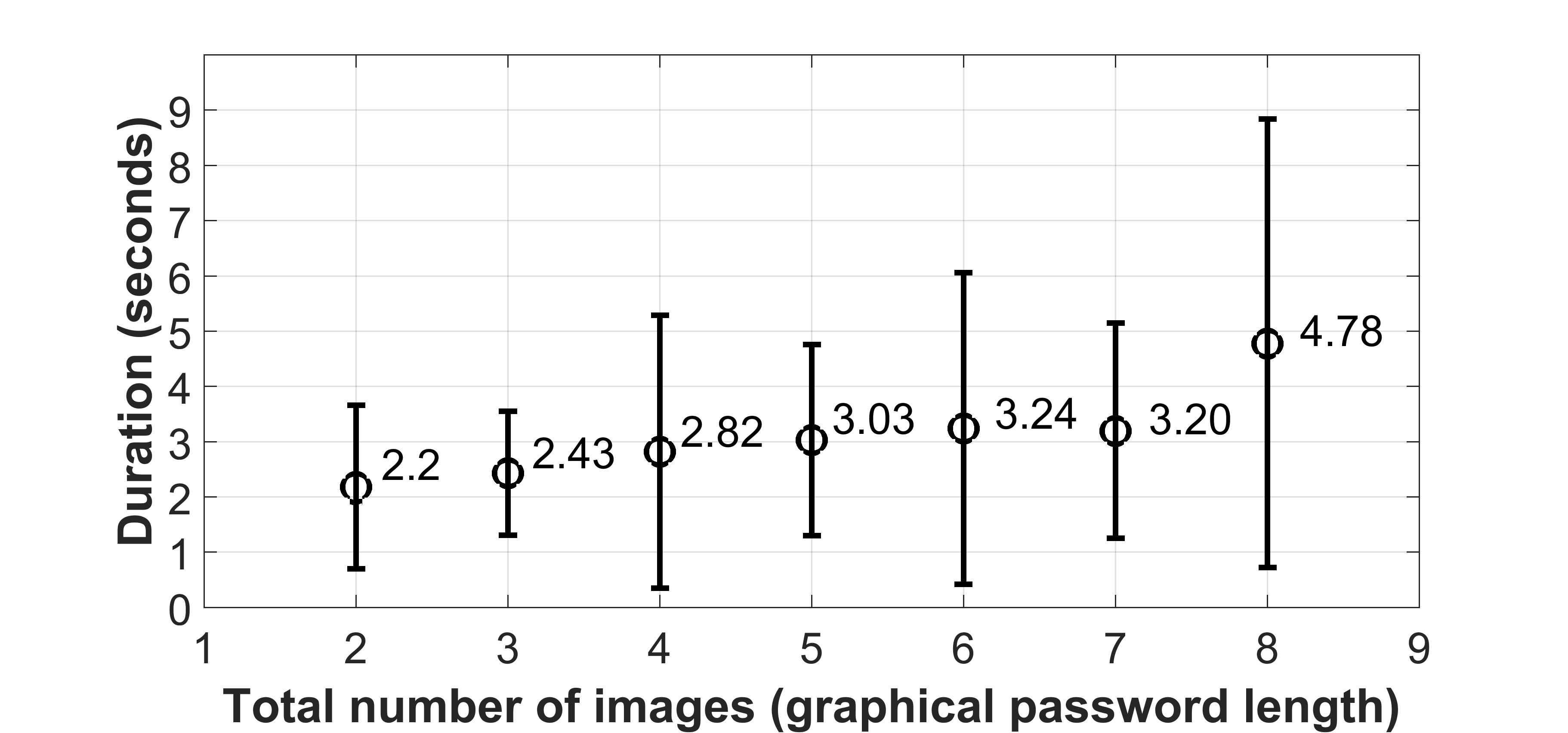

We implemented another web-based prototype that displays two to eight photos.

The photos came from videos recorded on two consecutive days.

The subject was required to pick the valid images, which depict events from the previous day but not from the current day.

Our system randomized both the total number of images and the quantity of valid photos and did not let the user know those parameters.

The user may select and unselect an image multiple times until reaching the correct configuration.

In the web application, clicking on an image toggles its state.

We recorded the number of clicks instead of the number of attempts.

The entry time is calculated from when the password fully appears until when the proper selection is reached.

Regardless of password length and number of valid images, the user needs on average 3.10 seconds and 5.14 clicks to solve a password.
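The image-selection scheme described above can be sketched as a small toggle-and-count model. This is an illustrative sketch under stated assumptions: the names `make_selection_challenge` and `SelectionSession` are hypothetical, and we assume every challenge contains at least one valid image and at least one decoy, which the description does not state. Entry-time measurement is omitted.

```python
import random


def make_selection_challenge(prev_day, curr_day, rng=random):
    """Draw two to eight images from two consecutive days.
    Valid images come from the previous day, decoys from the current day."""
    total = rng.randint(2, 8)
    # Assumption: at least one valid image and at least one decoy.
    n_valid = rng.randint(1, total - 1)
    valid = rng.sample(prev_day, n_valid)
    decoys = rng.sample(curr_day, total - n_valid)
    shown = valid + decoys
    rng.shuffle(shown)
    return shown, set(valid)


class SelectionSession:
    """Tracks toggled images and counts clicks rather than attempts."""

    def __init__(self, shown, valid):
        self.shown = shown
        self.valid = valid
        self.selected = set()
        self.clicks = 0

    def click(self, image):
        """Toggle the image's state; return True once the selection is correct."""
        self.clicks += 1
        self.selected ^= {image}
        return self.selected == self.valid
```

Because the user is told neither the total number of images nor the number of valid ones, the session only reports success once the selected set matches exactly.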

Publications